That 3 AM Search for a Safe Space

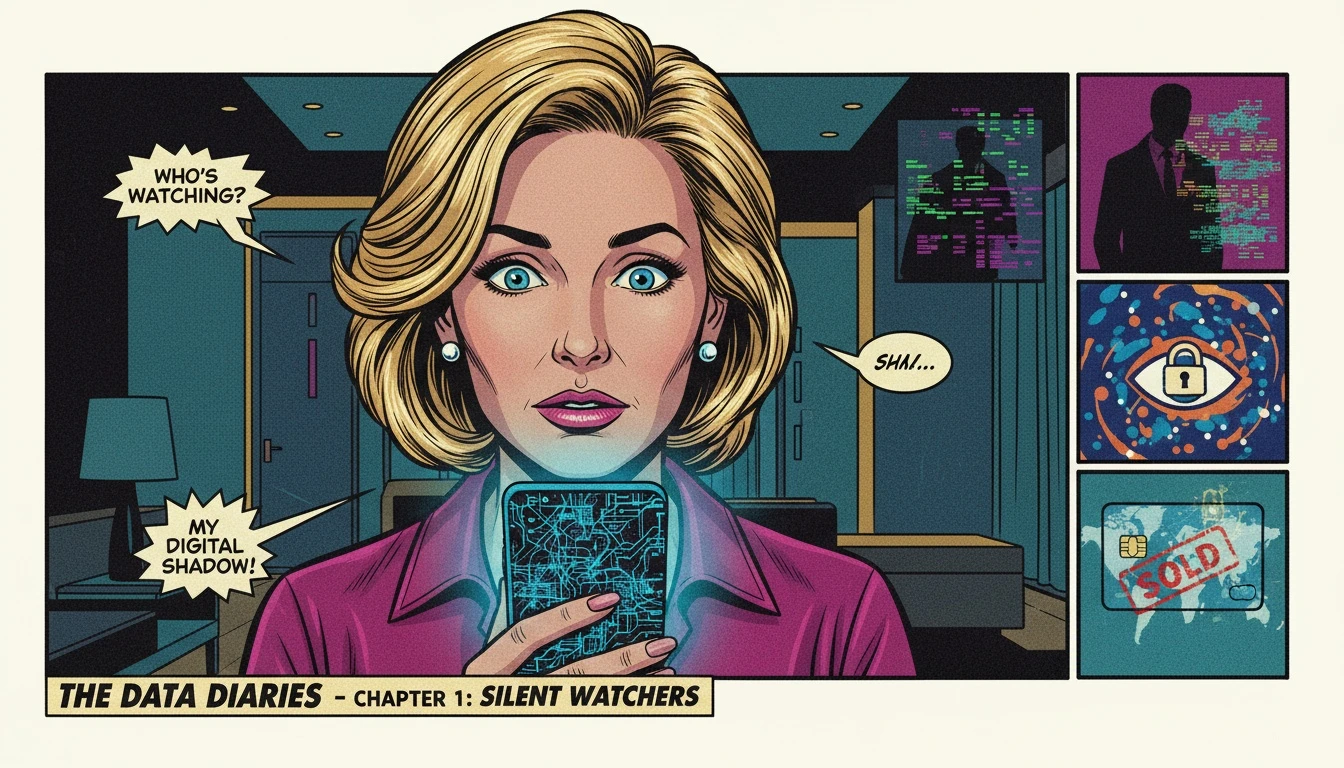

It’s late. The house is quiet, but your mind isn’t. There’s a weight on your chest that feels too heavy to carry alone, yet too messy to share with a friend. So you open a browser, the blue light of the screen illuminating the room, and you type the words: 'free ai therapist'.

The appeal is immediate and powerful: a confidential space, no judgment, no scheduling conflicts, and no cost. It promises a digital confessional where you can unpack your darkest thoughts without fear. But a flicker of doubt crosses your mind. In a world where every click is tracked, how safe is that confessional, really? This is the central tension of AI-powered mental health support.

The Fear is Valid: 'What Happens to My Data?'

Let’s take a deep breath right here. If you’re feeling a knot of anxiety about mental health app data privacy, that isn’t paranoia—it’s wisdom. It is a profoundly brave act to type your vulnerabilities into a chat window. Entrusting your story to anyone, or anything, requires immense courage.

Your question, 'is ai therapy confidential?', isn't just a technical one; it's an emotional one. It’s about feeling secure enough to be truly honest. When you're considering a free AI therapist, you're searching for a safe harbor. It’s completely natural and right to check whether that harbor is truly protected from storms. That hesitation you feel is your mind's beautiful way of protecting your heart.

Decoding the Fine Print: Red Flags in AI Privacy Policies

Alright, let's perform some reality surgery. Most privacy policies are designed to be confusing. They use soft language to mask harsh realities. They bank on you not reading them. So let's talk about the hard truth behind the corporate jargon.

When a policy mentions sharing your 'anonymized data' with 'third-party partners' for 'service improvement,' here's the translation: your deeply personal conversations, stripped of your name, could be used to train other AIs, sold to data brokers, or analyzed for marketing insights. The risks of using AI for mental health are not just theoretical; they are built into the business models of many platforms.

And the big question, 'can an AI therapist report you?', is complicated. AI platforms are generally not mandatory reporters the way licensed human therapists are, but their policies often include clauses allowing them to share information if they believe there's a risk of harm. The definition of 'harm' can be dangerously vague. This is where AI therapy chatbot privacy concerns become critical. As the American Psychological Association points out, the landscape of mental health apps is vast and largely unregulated, which demands vigilance from users.

Don't let the promise of anonymous AI chat therapy lull you into a false sense of security. A free AI therapist isn't really free; you're often paying with your data.

Your 5-Step Safety Checklist Before You Start Chatting

Feeling overwhelmed is not the goal. Empowerment is. You need a strategy to vet any digital mental health tool. Before you share a single word, execute this five-step action plan to protect yourself. This is how you reclaim control.

Step 1: Hunt for HIPAA Compliance.

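The Health Insurance Portability and Accountability Act (HIPAA) is the key US law protecting sensitive health information, but it applies only to healthcare providers, health plans, healthcare clearinghouses, and the business associates that handle data on their behalf. Many direct-to-consumer wellness apps fall outside it entirely. Search the app's website for the word 'HIPAA'. If a service is a HIPAA-compliant AI chatbot, it will usually say so prominently. Its absence is a major red flag.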

Step 2: Interrogate the Privacy Policy.

Don't just skim it. Use 'Ctrl+F' to search for keywords like 'share,' 'affiliates,' 'advertising,' and 'third-party.' Pay close attention to what the policy says about how your data is used. Vague language is a warning sign. A trustworthy free AI therapist should be transparent.
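If you're comfortable with a little Python, the same keyword hunt can be automated. The sketch below is a minimal example, not a vetting tool: it assumes you have copied the policy into a plain-text string, the function name `flag_policy_terms` is hypothetical, and the keyword list simply mirrors the words suggested above. A match is not proof of bad practice; it only points you to the sentence you should read closely.

```python
import re

# Keywords from Step 2. This list is an assumption and is not exhaustive.
RED_FLAG_TERMS = ("share", "affiliates", "advertising", "third-party")


def flag_policy_terms(policy_text, terms=RED_FLAG_TERMS, context=40):
    """Return (term, snippet) pairs for every case-insensitive match.

    Each snippet includes up to `context` characters on either side of the
    match, so you can read the surrounding wording rather than just count hits.
    """
    hits = []
    for term in terms:
        # Only a leading word boundary is required, so "share" also matches
        # "shared" and "shares" (but not "sharing"; add variants if needed).
        pattern = re.compile(r"\b" + re.escape(term), re.IGNORECASE)
        for match in pattern.finditer(policy_text):
            start = max(0, match.start() - context)
            end = min(len(policy_text), match.end() + context)
            snippet = policy_text[start:end].replace("\n", " ")
            hits.append((term, snippet))
    return hits


if __name__ == "__main__":
    # Hypothetical policy excerpt for illustration only.
    sample = ("We may share anonymized data with third-party partners "
              "and affiliates for advertising and service improvement.")
    for term, snippet in flag_policy_terms(sample):
        print(f"[{term}] ...{snippet}...")
```

Printing the surrounding text, instead of a simple yes/no, keeps the human judgment where it belongs: you still decide whether "share" means "share with your doctor" or "share with advertisers."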

Step 3: Confirm Chatbot Data Encryption.

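Look for explicit statements that your messages are encrypted in transit (for example, with TLS) and at rest (for example, with AES-256). Keep in mind that 'AES 256-bit' describes how strong the encryption is, not who holds the keys. True end-to-end encryption, where only you can read your messages, is hard for a cloud-based chatbot to offer, because the company's servers must decrypt your words for the AI to respond. If a platform says nothing specific about how it encrypts your conversations, assume they are vulnerable.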

Step 4: Verify Your Right to Be Forgotten.

Check whether you can easily and permanently delete your account and all associated chat history. Some platforms make this incredibly difficult. You should have the power to erase your presence completely. The best free AI therapist is one that respects your autonomy.

Step 5: Follow the Money.

If the service is completely free with no premium option, ask yourself how it makes money. Often, the answer is data monetization. A transparent business model (like a subscription for advanced features) is often a better sign for mental health app data privacy than a completely free AI therapist that feels too good to be true.

FAQ

1. Is my conversation with a free AI therapist truly confidential?

It depends entirely on the platform's privacy policy and technology. Encryption protects your messages from outsiders, but it does not stop the company itself from storing, analyzing, or sharing them under its own policy, and many services say little about their safeguards. 'Anonymized' data can often be shared with third parties for research or marketing. Always read the privacy policy carefully to understand the real level of confidentiality.

2. Can an AI therapist report me to the authorities?

Unlike licensed human therapists, who are mandatory reporters, AI platforms generally are not. However, many services include a clause in their Terms of Service or privacy policy that allows them to share your data with law enforcement if they detect a credible threat of harm to yourself or others. The definition of 'credible threat' can be ambiguous.

3. What are the biggest privacy risks of using AI for mental health?

The primary risks involve your sensitive data being sold, shared with third-party advertisers, or used to train commercial AI models without your explicit consent. There is also the risk of data breaches, where your personal conversations could be exposed. Choosing a free AI therapist requires careful vetting of their data security practices.

4. How can I find a HIPAA compliant AI chatbot?

Look for services that explicitly state they are 'HIPAA compliant' on their homepage or in their security documentation. Services that handle your data as a HIPAA covered entity or business associate are legally bound by stricter privacy and security rules. Such platforms are more common among paid, professional-grade services than among completely free consumer apps.

References

apa.org — What You Need to Know About Your Mental Health App’s Data Privacy