The Temptation of the 'Do-It-All' AI: Can ChatGPT Really Be Your Therapist?

It’s 2 AM. The house is quiet, the blue light from your phone is the only thing illuminating the room, and your thoughts are racing. You open a familiar browser tab: ChatGPT. It’s smart, it’s always available, and it doesn't carry the weight of judgment. The urge to type out what’s really on your mind is powerful.

Let’s pause here and acknowledge something important. As our emotional anchor Buddy would say, that impulse is not foolish or lazy; it’s profoundly resourceful. It comes from a brave place—a desire to be heard and a need for a safe harbor when the internal seas get rough. Turning to a tool that has helped you draft emails or plan vacations feels like a logical next step.

And for some things, it’s brilliant. It can summarize articles on anxiety or offer generic, encouraging phrases. It’s a powerful generalist. But the conversation changes entirely when the query shifts from ‘What are five symptoms of burnout?’ to ‘I think I’m burning out, and I feel like I’m failing everyone.’

The question of using ChatGPT as a therapist isn't just about technology; it's about vulnerability. You are bringing the most delicate parts of your experience to the screen. That's why asking whether a generalist tool is right for the specialized work of mental health is not just smart; it's a critical act of self-protection. The landscape of AI therapy is evolving, and knowing your tools is key.

Under the Hood: The Critical Differences in AI Architecture

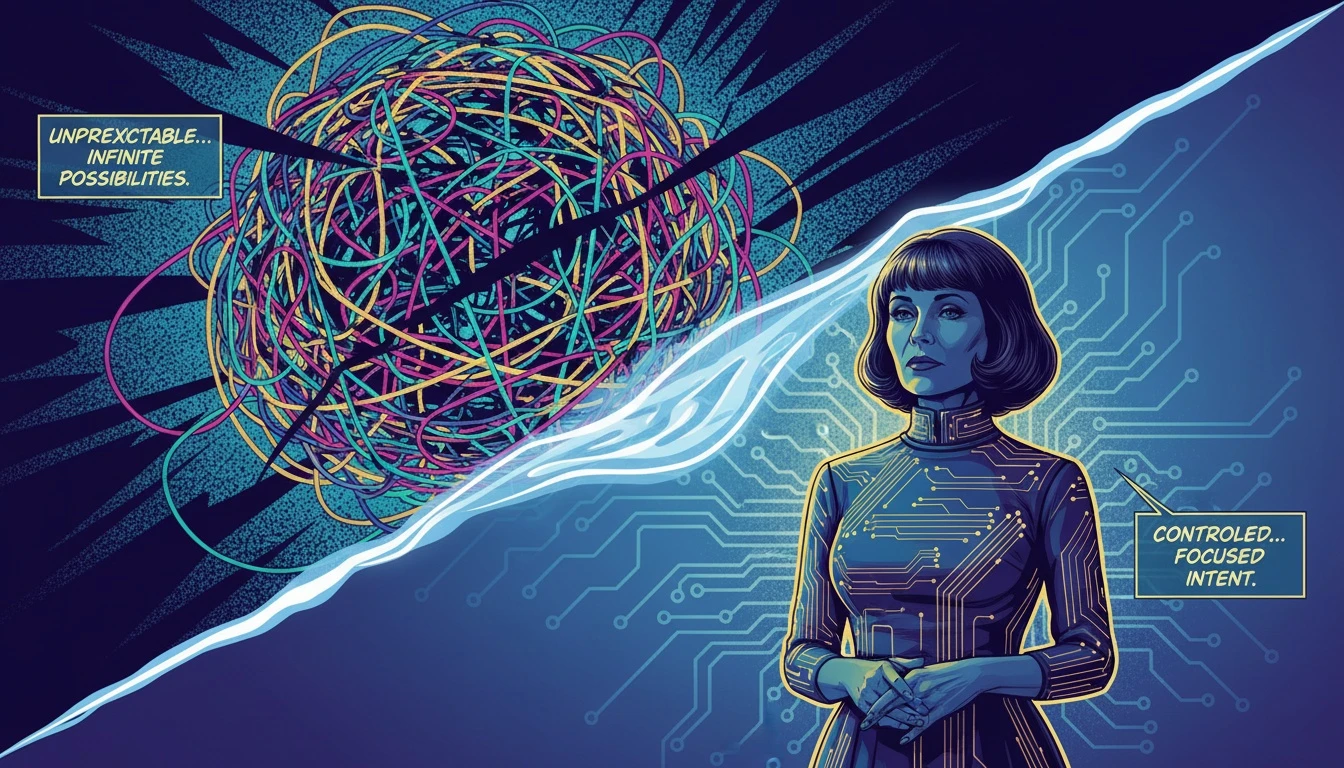

To understand the difference between asking ChatGPT for help and engaging with a dedicated AI therapy platform, we need to look at the underlying mechanics. As our systems analyst Cory puts it, 'This isn't about one AI being "smarter." It's about architecture and purpose. A hammer and a scalpel are both tools, but you wouldn't use them interchangeably.'

The fundamental distinction lies in how the model is fine-tuned. A general Large Language Model (LLM) like ChatGPT is trained on a vast dataset drawn largely from the public internet, spanning everything from Reddit threads and poetry to scientific papers and computer code. Its goal is to be a plausible conversationalist on almost any topic.

In contrast, therapeutically aligned AI models undergo a second, highly specialized training phase. They are fine-tuned on curated datasets of anonymized therapeutic dialogues, clinical psychology textbooks, and evidence-based manuals. This process doesn't just teach the AI what to say; it teaches it how to listen, how to reflect, and what not to say.

This leads to the most crucial difference: safety. General LLMs are prone to 'hallucinations', in which they state falsehoods with complete confidence. As the headline of one MIT Technology Review article puts it, ChatGPT is no therapist, though it can be a useful tool. A general-purpose model lacks dedicated guardrails for managing complex emotional states, and its limitations for therapy become glaringly obvious when it accidentally validates a harmful cognitive distortion or offers dangerously naive advice.

Finally, there's the issue of data privacy on ChatGPT. When you share your deepest fears, could they be used to train the general model for the next user? With the consumer version of ChatGPT, conversations may be used to improve OpenAI's models unless you opt out in the data controls settings. Well-designed specialized platforms are built with a therapeutic alliance in mind, prioritizing encrypted, confidential containers for your data. They are designed to be a private space, not a public training ground.

Here's Cory's permission slip: You have permission to demand a tool that was architected from the ground up with your psychological safety as its primary function. This is a core principle of effective AI therapy.

Choosing the Right Tool for the Job: When to Use Each AI

Navigating your mental wellness is a strategic act. You have to deploy the right resources at the right time to protect your peace and foster growth. Our strategist Pavo would frame it this way: 'Don’t bring a knife to a gunfight, and don't use a sledgehammer for surgery. Let’s create a clear action plan for your digital toolkit.'

Understanding the specific use case for each AI model moves you from being a passive user to an empowered strategist. Here is how to decide between a general LLM and a specialized AI therapy tool.

Use Case 1: ChatGPT (The Brainstorming Assistant)

When to use it: For depersonalized, information-gathering tasks. Think of it as a research assistant. Examples include summarizing articles about attachment theory, creating a list of journaling prompts, or drafting a formal email to request mental health leave from work.

The Goal: Efficiency and content generation. You are leveraging its broad knowledge base for non-personal tasks.

The Boundary: Avoid using it for deep personal introspection or for guidance during a crisis. It was not designed for emotional processing, and this is where its limitations become a risk.

Use Case 2: Specialized AI Therapy (The Emotional Gymnasium)

When to use it: For structured, personal emotional work. This is your safe space for practice and reflection. Use it to identify and challenge cognitive distortions, practice boundary-setting scripts, track your mood patterns, or engage with guided exercises from Cognitive Behavioral Therapy (CBT).

The Goal: Safe self-reflection and therapeutic skill-building. The best LLM for therapy is one specifically designed for it.

Pavo’s Script: Instead of asking a general AI, 'Why am I so anxious?', a more strategic approach with a specialized tool would be: 'I'm experiencing anxiety. Can we walk through a CBT exercise to examine the thoughts behind this feeling?' This is a proactive step in your AI therapy journey, not just a passive query.

FAQ

1. Is it safe to use ChatGPT for therapy?

While it can be a useful tool for general queries, it is not designed for therapy. Key risks of using it for AI therapy include data privacy concerns, the potential for harmful or inaccurate advice ('hallucinations'), and a lack of specialized safety guardrails for mental health crises.

2. What does 'fine-tuning' mean for an AI therapy model?

Fine-tuning is a secondary training process in which a general large language model is further trained on a specific, curated dataset. For AI therapy, this means using anonymized therapeutic conversations, psychology textbooks, and clinical guidelines to teach the AI to respond more empathetically and safely, in line with evidence-based practices like Cognitive Behavioral Therapy (CBT) or Dialectical Behavior Therapy (DBT).

3. Can AI therapy replace a human therapist?

Currently, AI therapy is best viewed as a supplemental tool, not a replacement. It offers accessibility and 24/7 support for managing daily stressors and practicing skills. However, it cannot replace the nuanced understanding, human connection, and complex case management that a licensed human therapist provides.

4. What's the main advantage of a specialized therapy AI over a general one?

The main advantage is purpose-built safety and effectiveness. Specialized models are fine-tuned on therapeutically aligned data, have specific guardrails to prevent harmful advice, offer stronger data privacy protocols, and often provide features like session memory to track your progress over time, making for a more coherent AI therapy experience.

References

MIT Technology Review (technologyreview.com): "ChatGPT is no therapist. But it can be a useful tool."

Reddit (reddit.com): "What AI tool is best for AI therapy and why?"