The Promise and the Pain of AI Therapy

It’s 2 AM. The house is quiet. You’ve just spent an hour typing out the intricate, tangled history of a relationship into a chat window. For the first time, you feel a sense of clarity, a flicker of relief. The AI on the other side is patient, non-judgmental, and surprisingly insightful. You think you’ve found a viable tool, maybe even a new kind of support system.

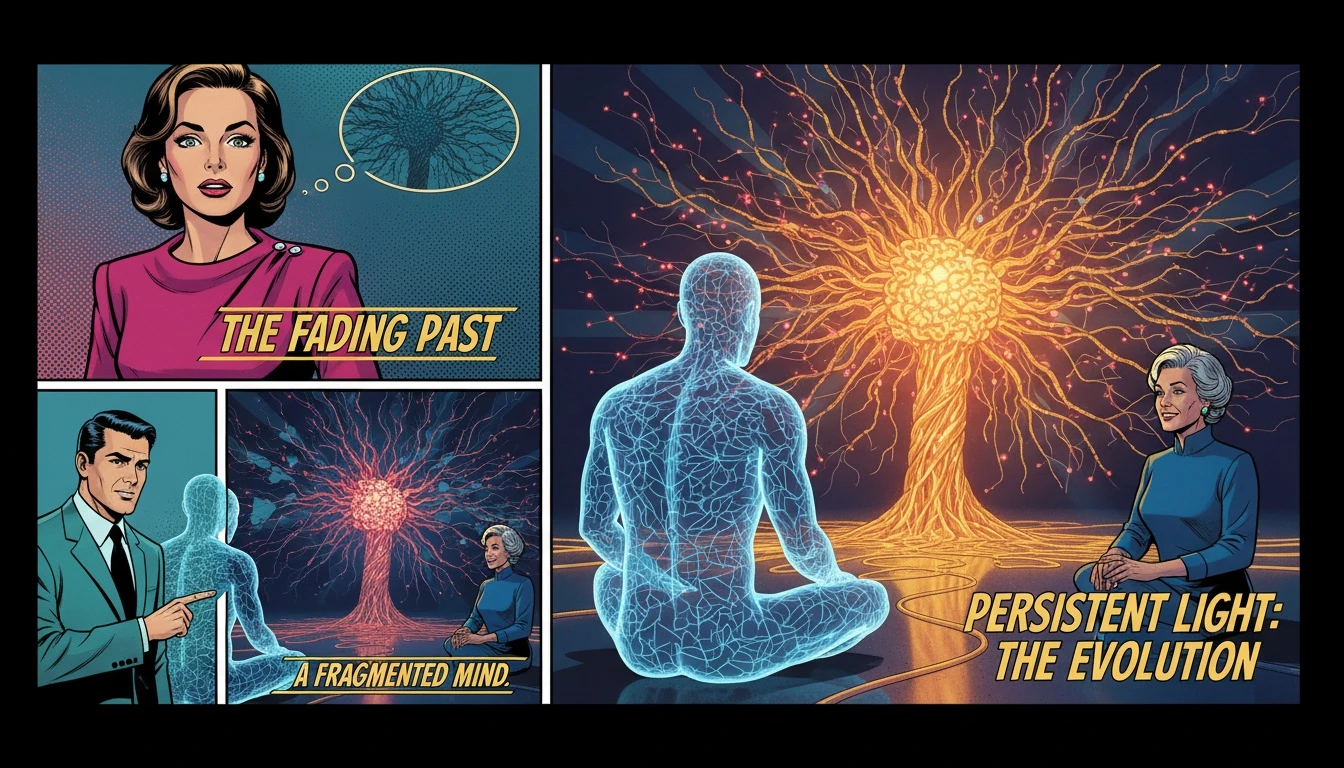

Then you log in the next day. You reference the conversation, the breakthrough you had just hours before, and you’re met with a blank slate: a polite, generic response with no recollection of your vulnerability. The AI has forgotten you. That specific, hollow feeling isn’t just a technical glitch; it’s a real emotional disconnect, and it points to the central limitation of using ChatGPT for therapy.

The 'Memory Wipe' Problem: When Your AI Forgets You

Let's sit with that feeling for a moment. It feels like a small betrayal, doesn't it? Even from a machine. You offered up a piece of your story, a vulnerable part of yourself, and it was simply discarded. Our emotional anchor, Buddy, would validate this immediately: That wasn't just a lost chat log; that was your brave attempt at connection feeling invalidated.

This is the single biggest failure of using a general-purpose model for mental health support. Therapy, whether with a human or a well-designed online AI therapist, is built on a foundation of continuity. Progress comes from building on past conversations and tracking patterns over time. When you have to re-explain your trauma, your triggers, or your triumphs every single time, you're not moving forward. You're stuck in an endless loop of introductions.

This isn't about being sentimental; it's about the mechanics of healing. An effective therapeutic tool needs long-term memory. Without it, the system can't recognize your growth, notice recurring negative thought cycles, or remind you of your own stated goals. It lacks the context to provide anything more than surface-level advice, so you end up pasting your history back in and re-engineering your prompts at the start of every session just to get back to square one.

Generalist vs. Specialist: The AI Architecture That Matters

Our sense-maker, Cory, would urge us to look at the underlying pattern. He’d say, "This isn't a flaw in the AI's character; it's a function of its design." A generalist Large Language Model (LLM) like ChatGPT is an incredible feat of engineering, designed to be a jack-of-all-trades. It can write a poem, debug code, and plan a vacation. But it is not a specialist.

A purpose-built AI therapist is fundamentally different. It runs on language models fine-tuned for mental health, meaning they have been trained specifically on data relevant to psychology, cognitive behavioral therapy, and empathetic dialogue. More importantly, it's built around a different architecture, one that includes a persistent memory layer. It remembers past conversations by design, not by chance.
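To make "persistent memory layer" a little more concrete, here is a minimal sketch of the idea, not a description of how any real product works. It assumes a small SQLite file as the store; the names `SessionMemory`, `build_prompt`, and `demo`, the table layout, and the sample note are all hypothetical and exist only for illustration.

```python
import os
import sqlite3
import tempfile


class SessionMemory:
    """Hypothetical memory layer: keeps short notes per user across sessions."""

    def __init__(self, path=":memory:"):
        # A file path persists between sessions; ":memory:" does not.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS notes ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "user_id TEXT NOT NULL, "
            "note TEXT NOT NULL)"
        )
        self.conn.commit()

    def remember(self, user_id, note):
        """Store one note (a goal, a trigger, a breakthrough) for this user."""
        self.conn.execute(
            "INSERT INTO notes (user_id, note) VALUES (?, ?)", (user_id, note)
        )
        self.conn.commit()

    def recall(self, user_id, limit=5):
        """Return up to `limit` of the most recent notes, oldest first."""
        rows = self.conn.execute(
            "SELECT note FROM notes WHERE user_id = ? ORDER BY id DESC LIMIT ?",
            (user_id, limit),
        ).fetchall()
        return [row[0] for row in reversed(rows)]

    def close(self):
        self.conn.close()


def build_prompt(memory, user_id, message):
    """Prepend remembered notes to the new message before it reaches the model."""
    notes = memory.recall(user_id)
    context = "\n".join(f"- {n}" for n in notes) or "- (no earlier notes)"
    return f"Notes from earlier sessions:\n{context}\n\nUser: {message}"


def demo():
    """Simulate two separate sessions that share one on-disk store."""
    path = os.path.join(tempfile.mkdtemp(), "memory.db")

    first = SessionMemory(path)  # session one: the 2 AM conversation
    first.remember("user-1", "Goal: set boundaries with my sister")  # made-up note
    first.close()

    second = SessionMemory(path)  # session two: the next day
    prompt = build_prompt(second, "user-1", "I want to pick up where we left off.")
    second.close()
    return prompt


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is the second session: because the notes live outside the chat window, the next conversation can start from what you already shared instead of from a blank slate. A real system would also need encryption, consent, and a careful policy for what gets stored, none of which is shown here.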

This structural difference matters for safety and efficacy. A specialized AI therapist can be built with guardrails that a general model lacks. As one psychologist noted in an article for `[Business Insider](https://www.businessinsider.com/psychologist-explains-why-chatgpt-is-not-therapist-2023-5

FAQ

1. Can AI truly replace a human therapist?

Currently, a specialized AI therapist is best viewed as a powerful support tool, not a full replacement. It offers 24/7 accessibility, a judgment-free space for initial exploration, and help with specific techniques like CBT. However, it cannot replicate the nuanced, embodied presence and deep relational connection of a human professional, which is critical for complex trauma and severe mental health conditions.

2. What makes a good AI therapist different from ChatGPT?

The three key differentiators are: 1) Long-term memory, allowing it to recall past conversations and track your progress. 2) Specialization, meaning it's built on fine-tuned language models for mental health, providing more relevant and safer responses. 3) Privacy, ensuring your conversations are secure, encrypted, and not used for broad model training.

3. Is it safe to talk to an AI about my mental health?

Safety depends entirely on the platform you choose. Using a general-purpose AI like ChatGPT has risks, as your data may be used for training and the model lacks clinical safety guardrails. A reputable, specialized AI therapist should offer end-to-end encryption, a clear privacy policy, and a design informed by clinical oversight so that it handles sensitive topics appropriately.

4. How can I tell if an AI chatbot has long-term memory?

First, check the platform's features list; they will almost always advertise it as a key benefit. During a trial, explicitly test it. Share a specific detail or goal in one session, and then reference it in a new session a day or two later. A good AI with memory will seamlessly integrate that past information into the new conversation.

References

businessinsider.com — I'm a Psychologist Who Uses ChatGPT. Here's Why You Shouldn't Use It as a Therapist.

reddit.com — I made an AI therapist using LangChain to improve upon ChatGPT's memory limitations