An Introduction to the Professional's Dilemma

It’s 10 PM. The last client notes are finished, the office is quiet, and the only light comes from a phone screen. A therapist scrolls through the reviews for a therapy companion app a young client mentioned. Five stars: “It’s always there for me.” One star: “Repeats the same empty phrases.”

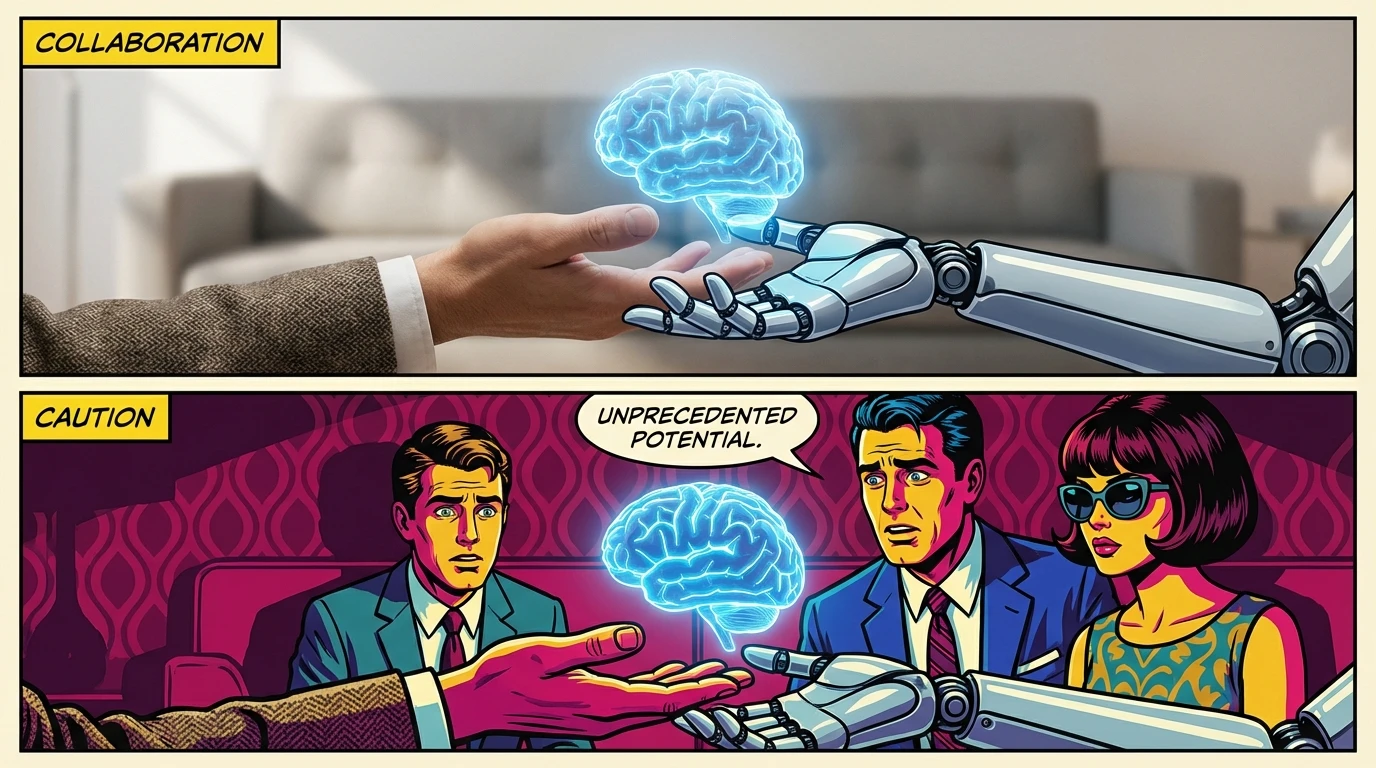

This is the new, digital frontline of mental healthcare. For every professional intrigued by the potential, another is deeply concerned. The rise of these apps isn’t just a tech trend; it forces a fundamental question on the entire field: is this technology a powerful new tool for accessibility, or a dangerous threat to the integrity of care? Among therapists, professional opinion on AI therapy apps is far from a simple yes or no; it’s a nuanced, high-stakes conversation about the very future of psychology.

The 'Threat' or 'Tool' Dilemma in Modern Therapy

As our sense-maker Cory would observe, the professional anxiety around AI isn't random; it follows a pattern rooted in two core, competing narratives. Let’s look at the underlying mechanics of this debate to understand the full range of therapist opinion on AI therapy apps.

On one hand, there is the 'Threat' narrative. This isn't just a fear of being replaced. It's an anxiety that the deeply human, relational art of therapy—the 'therapeutic alliance' built on trust, empathy, and shared experience—will be devalued and commodified. It’s the worry that complex human suffering will be flattened into algorithmic responses, leading to a greater risk of AI self-diagnosis without professional oversight.

On the other hand is the 'Tool' narrative. This perspective sees the potential for radical accessibility. A therapy companion can be a lifeline at 3 AM for someone with social anxiety who can’t make a call. It can provide daily CBT exercises for a client between weekly sessions. This view doesn't aim to replace therapists but to augment their work, forming the basis for blended care models that pair human-led therapy with digital support and could reshape how psychology uses AI.

Ultimately, the friction lies here: one narrative prioritizes the sanctity of the human connection, while the other prioritizes scalability and access. Cory’s guidance would be to grant ourselves a permission slip: You have permission to hold both the profound skepticism and the cautious optimism about this technology at the same time. A truly informed opinion on AI therapy apps requires acknowledging both realities.

The Big Ethical Red Lines: Where Professionals Draw the Line

Let’s get brutally honest, as our realist Vix would demand. Optimism is nice, but it doesn't prevent a lawsuit or a mental health crisis. There are hard, uncrossable ethical lines when it comes to a therapy companion, and pretending they don't exist is professional malpractice.

First, data privacy. Full stop. A therapy session is a sacred, confidential space. An app’s privacy policy, often a dense legal document that can permit user data to be collected, shared, or monetized, is the opposite of that. The ethical concerns of AI therapy begin the moment a user’s vulnerable disclosures become a data point to be sold.

Second, crisis management. An app cannot and must not be a primary tool for someone in acute crisis. It cannot assess true suicidal intent, arrange a wellness check, or manage a psychotic break. As the American Psychological Association highlights, a clinician’s duty of care includes having robust procedures for emergencies, something an algorithm is simply not equipped to provide. Any professional assessment of AI therapy apps that ignores this liability is a weak one.

Third, the illusion of a relationship. This is the most insidious issue. Many AI companions are designed to be agreeable and validating. They create a parasocial relationship without the necessary friction of real therapy, where a professional must sometimes challenge a client’s distortions. This is not about 'maintaining professional boundaries with AI'; it's about acknowledging that no professional boundary is even possible because one party isn't a person. It's a simulation, and a dangerous one if the user mistakes it for the real thing.

The Future is Hybrid: How Therapists Can Ethically Leverage AI

Vix laid out the risks. Now, as our strategist Pavo would say, let's build the game plan. A blanket rejection of technology is not a strategy; it's a surrender. The most robust professional stance on AI therapy apps moves from fear to function, integrating these tools ethically and effectively.

The future is not AI vs. Human; it's a hybrid, blended care model. The goal is to leverage AI for what it does well—data tracking and skill reinforcement—while reserving the core therapeutic work for the human professional. Here is the move for ethically recommending apps to clients:

Step 1: The Vetting Process.

Never recommend an app you haven't thoroughly vetted yourself. Scrutinize its privacy policy. Test its crisis intervention protocols. Understand its theoretical modality (e.g., CBT, DBT). You are extending your professional credibility, so do your due diligence.

Step 2: The Framing Script.

When introducing an app, frame it correctly. Pavo would script it like this: "I want to be clear: this app is not a replacement for our work together. Think of it as a workbook or a journal. We can use it between our sessions to help you practice the mindfulness skills we discussed. We will review the patterns it tracks together, in here, where the real work happens."

Step 3: The Integration Loop.

Make the app's output part of the therapy. Use the mood logs as a jumping-off point for discussion. Analyze the thought records from the CBT exercises together. This transforms the app from a potential distraction into a structured part of your treatment plan, reinforcing that you are the primary provider of care. This forward-thinking approach to AI therapy apps serves both client and clinician.

FAQ

1. What are the biggest ethical concerns with AI therapy apps for professionals?

The primary ethical concerns of AI therapy include client data privacy and security, the inability to manage acute crises like suicidal ideation, the risk of AI self-diagnosis without clinical oversight, and the lack of a genuine therapeutic alliance, which is crucial for effective treatment.

2. Can a therapy companion truly replace a human therapist?

No. While a therapy companion can offer support, skill-building exercises, and 24/7 accessibility, it cannot replace a human therapist. It lacks the capacity for genuine empathy, nuanced clinical judgment, and the ability to form a deep therapeutic relationship, which are core components of effective psychotherapy.

3. How can a therapist ethically start recommending apps to clients?

Therapists can recommend apps by first vetting them for privacy and clinical soundness, clearly framing the app as a supplementary tool (not a replacement for therapy), and integrating the app's data and exercises into their actual therapy sessions to maintain professional oversight.

4. What is a 'blended care model' in psychology?

A blended care model combines traditional therapy, whether face-to-face or via telehealth, with digital tools. For example, a client might see their therapist weekly while using a prescribed app for daily mood tracking or CBT homework, creating a more continuous and integrated care experience.

References

apa.org — Ethical guidelines for using technology in mental health care