The Late-Night Confession and the Digital Void

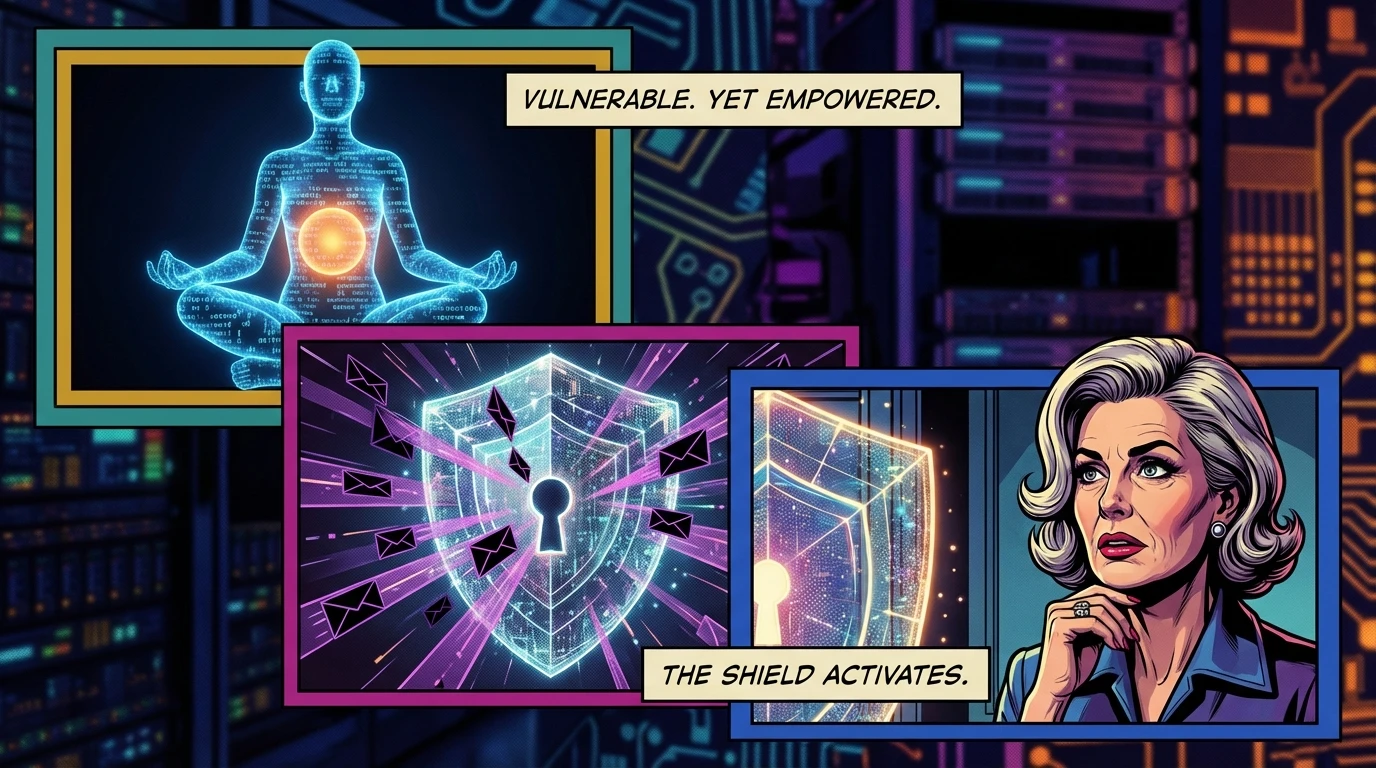

It’s 2 AM. The house is quiet, and the blue glow of your phone is the only light. You type out a fear you’ve never said aloud, a raw confession into the chat window of a new mental health app. It feels like a relief, a weight lifted. The chatbot responds with something perfectly empathetic.

But then, a cold thought trickles in. Where did that confession go? Is it sitting on a server somewhere? Who has the key? This isn't just a fleeting worry; it sits at the core of AI therapy data privacy, and everyone using these platforms must confront it. You sought a sanctuary, and now you're left wondering whether you just whispered your secrets into a corporate surveillance system.

The Vulnerability Hangover: Sharing Your Soul With a Corporation

Let’s just name that feeling: it’s a vulnerability hangover. And Buddy is here to tell you that it's completely, utterly valid. That knot in your stomach when you think about mental health app data collection isn’t paranoia; it's your intuition screaming that your privacy matters.

You did something incredibly brave by seeking support. That impulse to heal is a testament to your character, not a sign of naivety. The real problem is that the digital wellness industry often blurs the line between care and commerce, and your deepest anxieties can end up as data points. That is why the fundamental question, 'Do therapy apps sell your data?', is not just a technical query but an emotional one that touches on trust and safety.

As our emotional anchor Buddy reminds us, “That feeling of unease is your self-preservation instinct. It's not cynical; it's wise.” You deserve to know the rules of the room before you bare your soul. Understanding how AI therapy apps actually handle your data is the first step to reclaiming your power in this new landscape.

Decoding the Fine Print: What 'Secure' and 'Compliant' Really Mean

This is where we need to switch from feeling to analysis. As our sense-maker Cory would say, 'Let's look at the underlying pattern here.' The language used by tech companies is designed to be soothingly vague. Terms like 'secure mental health chatbots' and 'HIPAA compliant AI therapy' are thrown around freely, but what they guarantee in practice is often murky.

According to a report from Wired, many popular mental health apps have alarmingly poor privacy practices. The ethics of AI in mental health are complex, and the law has not kept pace. HIPAA, the US health data privacy law, applies to 'covered entities' such as healthcare providers and health plans, along with their business associates. It was built for hospitals and clinics, and many direct-to-consumer apps fall outside it entirely. Even an app that stores data to HIPAA standards may have a privacy policy that still allows it to share 'anonymized' or 'aggregated' data with third-party advertisers. That gap sits at the center of the debate over AI therapy and privacy.

'Anonymized' data sounds safe, but re-identification is a well-documented reality: combining a few ordinary details, such as ZIP code, birth date, and gender, with another dataset can often single out one specific person. Cory’s perspective is clear: we must investigate the system, not just the sales pitch. Debates such as the one surrounding the app Sonia often highlight a business model that can feel predatory when vulnerable users are the product. That is why these privacy concerns need to be addressed proactively, not after the fact.
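To make re-identification less abstract, here is a minimal sketch of one well-known technique, a linkage attack: an "anonymized" dataset with names removed is joined to a second dataset that does carry names, using the details the two share. Everything here is a hypothetical illustration under stated assumptions: the records are invented, and the function name `link_records` and the choice of ZIP code, birth date, and gender as the shared details are ours, not taken from any real app.

```python
# Hypothetical, invented records for illustration only.
# An "anonymized" export: names removed, but identifying details left in.
anonymized_chats = [
    {"zip": "02138", "birth_date": "1985-07-14", "gender": "F", "topic": "panic attacks"},
    {"zip": "02139", "birth_date": "1990-01-02", "gender": "M", "topic": "insomnia"},
]

# A separate, hypothetical dataset that does carry names (e.g. a public or leaked list).
public_records = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1985-07-14", "gender": "F"},
    {"name": "Bob Example", "zip": "02139", "birth_date": "1990-01-02", "gender": "M"},
    {"name": "Carol Example", "zip": "02139", "birth_date": "1972-03-30", "gender": "F"},
]

# Assumed "quasi-identifiers": details that are harmless alone but revealing together.
QUASI_IDENTIFIERS = ("zip", "birth_date", "gender")


def link_records(anon_rows, named_rows, keys=QUASI_IDENTIFIERS):
    """Return (name, topic) pairs for anonymized rows that match exactly one named row."""
    # Index the named dataset by its combination of quasi-identifiers.
    index = {}
    for row in named_rows:
        index.setdefault(tuple(row[k] for k in keys), []).append(row["name"])

    matches = []
    for row in anon_rows:
        candidates = index.get(tuple(row[k] for k in keys), [])
        # A unique match means the "anonymous" record now has a name attached.
        if len(candidates) == 1:
            matches.append((candidates[0], row["topic"]))
    return matches


if __name__ == "__main__":
    for name, topic in link_records(anonymized_chats, public_records):
        print(f"{name} -> {topic}")
```

The rarer a combination of details is, the more likely it points to a single person, which is why stripping names alone offers limited protection.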

Cory offers this permission slip: “You have permission to demand absolute clarity on how your data is used. Your peace of mind is not a commodity.” Meeting this challenge requires us to be informed consumers.

A 3-Step Privacy Check Before You Hit 'Download'

Feeling empowered is about moving from awareness to action. Our social strategist, Pavo, treats digital safety as a game of chess where you must protect your king. "Don't get played," she'd advise. "Audit their strategy before you reveal yours." Here is the move that protects you.

Before you download any mental health app, run this simple three-step privacy check. It is your personal shield against the most pressing privacy risks.

Step 1: Audit the Business Model.

Ask one question: How does this app make money? A one-time fee or a clear subscription is a good sign, though not a guarantee. If the app is free, be skeptical. A common saying in tech is, "If you're not paying for the product, you are the product." In that case your data is the currency, so reading the privacy policy is non-negotiable. Free business models are a primary source of these privacy concerns.

Step 2: Find the 'Delete My Data' Button.

Before you even sign up, search the company's website or FAQ for instructions on deleting your data. Is it a single, easy-to-find button, or a convoluted process requiring multiple emails? A company that respects your privacy makes the exit as clear as the entrance. If deleting your data is difficult, treat that as a major red flag.

Step 3: Scan the Privacy Policy with Keywords.

You don't need a law degree. Open the privacy policy and use your browser's find function (Ctrl+F or Cmd+F) to search for these terms: "third party," "affiliates," "marketing," "advertising," and "anonymized." Each match points you to a passage describing how your data might be shared, so read those passages closely. This quick, tactical check can save you significant anxiety later. Pavo insists it isn't optional; it's essential self-defense in a digital world, and skipping it is a risk not worth taking.
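If you check several apps, the same keyword scan can be automated. The sketch below is a minimal illustration, assuming you have copied the policy into a plain-text file; the function name `scan_policy`, the file name `privacy_policy.txt`, and the sample policy text are hypothetical, not taken from any real app. It searches case-insensitively, treats "third party" and "third-party" as the same term, and prints a short snippet of surrounding text for each match.

```python
import re
from pathlib import Path

# The Step 3 keywords; extend this list as you see fit.
KEYWORDS = ["third party", "affiliates", "marketing", "advertising", "anonymized"]

# Hypothetical policy excerpt, used only when no policy file is found.
SAMPLE_POLICY = (
    "We may share anonymized usage data with third-party partners and "
    "affiliates for marketing and research purposes."
)


def scan_policy(text, keywords=KEYWORDS, context=40):
    """Return {keyword: [snippet, ...]} for every case-insensitive match in text.

    Each snippet includes up to `context` characters on either side of the
    match, so you can jump straight to the passage that needs reading.
    """
    hits = {}
    for keyword in keywords:
        # Let spaces in a keyword also match hyphens ("third party" / "third-party").
        words = (re.escape(word) for word in keyword.split())
        pattern = re.compile(r"[\s-]+".join(words), re.IGNORECASE)
        snippets = []
        for match in pattern.finditer(text):
            start = max(0, match.start() - context)
            end = min(len(text), match.end() + context)
            # Collapse line breaks and repeated spaces inside the snippet.
            snippets.append(" ".join(text[start:end].split()))
        if snippets:
            hits[keyword] = snippets
    return hits


if __name__ == "__main__":
    # Hypothetical file name: paste the app's privacy policy into it first.
    path = Path("privacy_policy.txt")
    policy = path.read_text(encoding="utf-8") if path.exists() else SAMPLE_POLICY
    for keyword, snippets in scan_policy(policy).items():
        print(f"{keyword!r}: {len(snippets)} match(es)")
        for snippet in snippets:
            print("   ...", snippet, "...")
```

A match is a prompt to read, not a verdict: a policy that says "we do not share data with third parties" will match too, which is why the script shows the surrounding text rather than just a count.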

FAQ

1. What are the main privacy risks of using an AI therapy app?

The primary risks are that your sensitive personal data could be shared with or sold to third parties for marketing, used to train AI models without your explicit consent, or exposed in a data breach. At the core of the problem is the lack of US federal regulation written specifically for these apps.

2. Is any AI therapy app truly anonymous?

True anonymity is extremely difficult to achieve. While some services offer 'anonymous AI therapy' by not requiring a real name, they still collect your IP address, device information, and the content of your conversations. This data can potentially be de-anonymized. Always read the privacy policy to understand what 'anonymous' means to them.

3. How can I tell if a mental health app is selling my data?

Look for specific language in the privacy policy. Phrases like 'sharing data with marketing partners,' 'third-party affiliates,' or 'for business purposes' are red flags. A transparent company will state explicitly that it does not sell user data. The most serious concern of all is the monetization of users' vulnerabilities.

4. What's the difference between a HIPAA-compliant app and a truly private one?

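HIPAA sets a baseline for protecting health information held by covered entities, such as healthcare providers and health plans, and their business associates. An app marketed as 'HIPAA compliant' may only mean that its storage and transmission of data meet HIPAA's security standards. If the company is not a covered entity or business associate, HIPAA's limits on how data is used and disclosed may not apply to it at all, and what it can do with your data is governed largely by the privacy policy you agree to. A truly private app would have a strict policy against sharing or selling data for any purpose, going beyond the minimum that HIPAA requires.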

References

wired.com — Most Mental Health Apps Are a Data Privacy Nightmare

reddit.com — Example of Predatory SaaS (Sonia AI)