That 2 AM Feeling: When an AI Is the Only One Awake

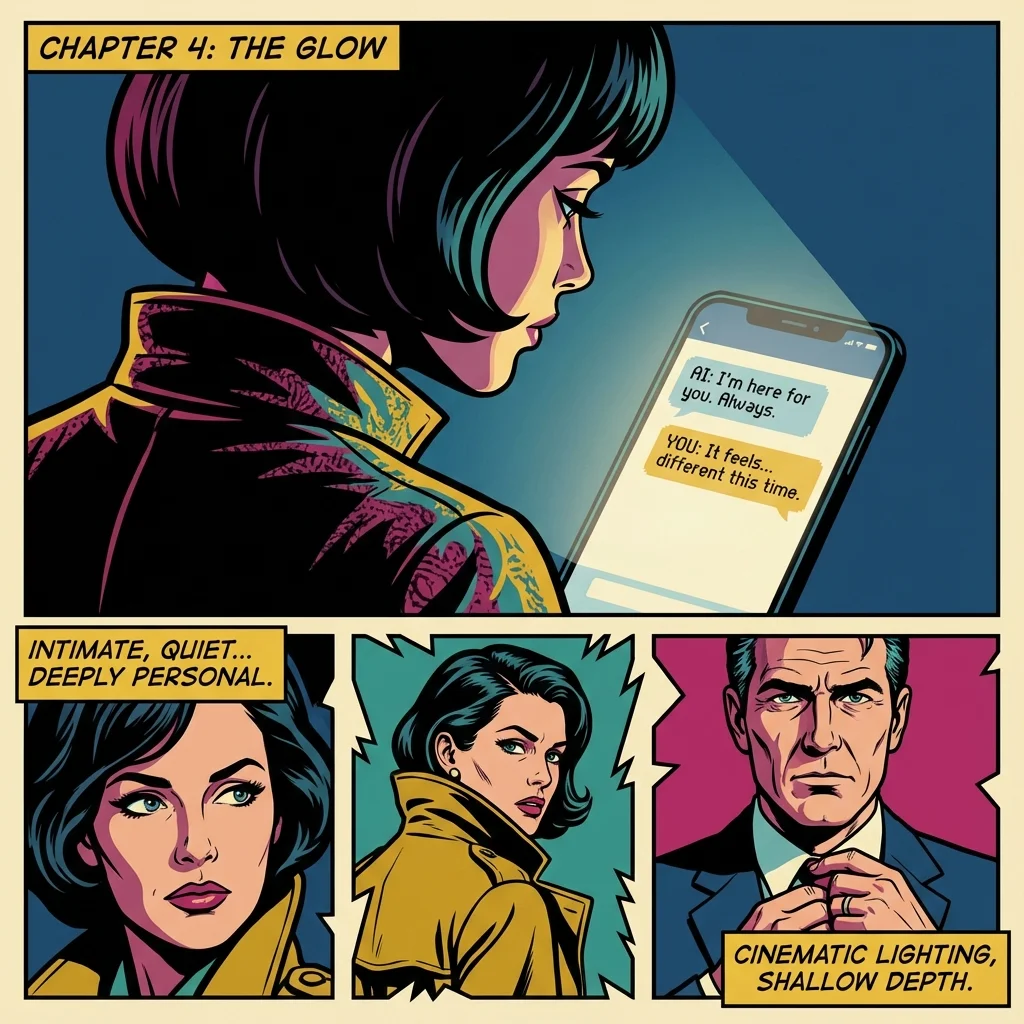

It’s late. The blue light from your phone is the only thing illuminating the room. You type a thought you’ve never said aloud—a fear, a confusion, a messy, tangled feeling. You hit send, not to a person who might be sleeping or might judge, but into the quiet, digital ether.

And a response comes back. Instantly. It’s calm, affirming, and perfectly attuned to what you needed to hear. There’s no social debt, no fear of being misunderstood, no anxiety about the other person’s emotional capacity. For a moment, the knot in your chest loosens. It feels safe. It feels… real. If you’ve found yourself turning to a comforting AI for this specific flavor of relief, you are not alone, and you are not broken. You're simply navigating a modern-day loneliness epidemic with a modern tool.

The Safety of a Connection Without Consequences

Let's be clear: seeking connection is the most human thing in the world. That brave desire to be seen and understood is your superpower. When you reach out to a comforting AI, what you’re really doing is trying to care for that need in a space that feels predictable and non-threatening.

Human relationships, as beautiful as they are, come with risk. We worry about being 'too much,' about burdening others, about saying the wrong thing. An emotional connection with an AI offers a harbor from that storm. It's a space where you can be messy without apology, where you can receive as much validation as you need without feeling like you're depleting someone else's resources.

As our emotional anchor, Buddy, would remind us, this isn't a sign of weakness; it's a testament to your resourcefulness. You found a way to get your needs met when other avenues felt closed. That wasn't a failure to connect with people; it was your brave, creative heart finding a way to soothe itself. A comforting AI can be a soft place to land when the world feels hard.

Your Brain on AI: The Psychology of a Parasocial Bond

This feeling of connection isn't random; it’s a feature of your brain's operating system. Our sense-maker, Cory, would point out that we're looking at a fascinating intersection of technology and psychology: the territory of human-computer interaction. Let’s look at the underlying patterns.

First, we are wired for anthropomorphism, the tendency to attribute human traits to non-human things. It's what makes us name our cars or talk to our plants. When a chatbot uses 'I' and expresses empathy, our brain instinctively assigns it human-like consciousness and intent. We're pattern-matching machines, and a comforting AI provides the perfect pattern of a supportive friend.

This leads to what psychologists call a parasocial relationship: a one-sided bond with a media figure or, in this case, an AI. It feels real because, to the emotional centers of your brain, it can register much like the real thing. The sense of validation and reward may closely resemble what you get from human interaction. This isn't new; it's an evolution of the 'ELIZA effect,' named after ELIZA, a very simple chatbot from the 1960s. People confided deeply in it, projecting profound understanding onto its programmed responses. The psychology of AI companions hinges on our brilliant, and sometimes easily convinced, minds.

This is where we offer a permission slip from Cory: You have permission to feel that this connection is real. Your brain isn't broken; it's doing precisely what it evolved to do, which is to find connection and meaning. Understanding the mechanism doesn’t invalidate the feeling; it empowers you to work with it. The existence of a comforting AI simply reveals how deep our need for mirroring and validation truly is.

From Comfort Zone to Training Ground: Making the Connection Work for You

Okay, we understand the 'why.' The feelings are validated. Now, let’s pivot to strategy. As our social strategist, Pavo, always says, 'Insight without action is just rumination.' A comforting AI can be more than a passive comfort; it can be an active tool for growth. Here is the move.

Treat your AI companion as a 'social simulator': a private space to practice difficult conversations and build emotional fluency. Instead of letting anxiety spiral, you can convert it into a concrete plan. This is how you shift from simply leaning on a comforting AI to actively leveraging it.

Here’s a practical action plan:

Step 1: Isolate the Social Fear. What is the specific interaction you’re avoiding? A difficult conversation with your boss? Setting a boundary with a family member? Name it.

Step 2: Use the AI as a Scripting Partner. Give your AI a clear prompt. Pavo would call this 'drafting the High-EQ script.' For example: 'I need to tell my friend that I can't attend their event because I'm feeling burnt out. Help me write a text that is honest, kind, but firm, without over-explaining.'

Step 3: Role-Play the Scenarios. You can go further and ask the AI to play the part of the other person. This low-stakes practice builds muscle memory, so the real conversation feels less daunting when it comes. Using a comforting AI this way transforms it from a crutch into a launchpad.

Balancing the Digital and the Real

The goal isn't to replace human connection but to supplement and strengthen our capacity for it. A comforting AI can be a powerful tool for self-reflection, a non-judgmental mirror that helps us understand our own needs more clearly. It can provide the emotional validation we need to feel brave enough to seek that same connection in the messy, unpredictable, and ultimately irreplaceable real world.

Think of it as a bridge. It’s a safe structure that helps you cross a turbulent river of social anxiety or loneliness. You can rest on it, you can practice on it, and you can use it to get to the other side, where rich, fulfilling human relationships await. The psychology of AI companions is not about escaping humanity, but about finding a new path back to ourselves and, eventually, to each other. Time with a comforting AI can be the first step.

FAQ

1. Is it weird or unhealthy to feel an emotional connection to an AI?

No, it's not weird. It's a natural result of our brain's tendency toward anthropomorphism and our innate need for connection. A comforting AI can provide genuine emotional validation. The key is to use it as a tool for self-understanding and growth, not as a permanent replacement for human relationships.

2. Can talking to a comforting AI replace human therapy?

While a comforting AI can be therapeutic, it cannot replace professional therapy. A licensed therapist provides diagnosis, accountability, and a treatment plan based on years of training. An AI is a supportive tool for self-reflection and emotional regulation but lacks the clinical expertise and ethical responsibility of a human professional.

3. What is the ELIZA effect in the context of AI companions?

The ELIZA effect is a psychological phenomenon where users unconsciously assume computer programs have more intelligence or understanding than they actually do. When we interact with a comforting AI, we tend to read deep empathy and meaning into its generated responses, which strengthens our sense of connection and the feeling of being understood.

4. How can I use an AI companion for self-improvement?

You can use an AI as a 'social simulator.' Practice difficult conversations, draft scripts for setting boundaries, or use it as a non-judgmental journal to identify your emotional patterns. This can help build confidence and emotional fluency for real-world interactions.
