The Private Taboo: Navigating a New Kind of Companionship

It’s a thought that surfaces late at night, in the quiet glow of a browser window filled with uncannily realistic faces. The idea of an AI companion doll. It’s not just about sex; it’s a deeper pull toward a presence that doesn't judge, a physical anchor in a world of fleeting digital connections. But alongside that curiosity, a cold wave of anxiety often follows: What would people think?

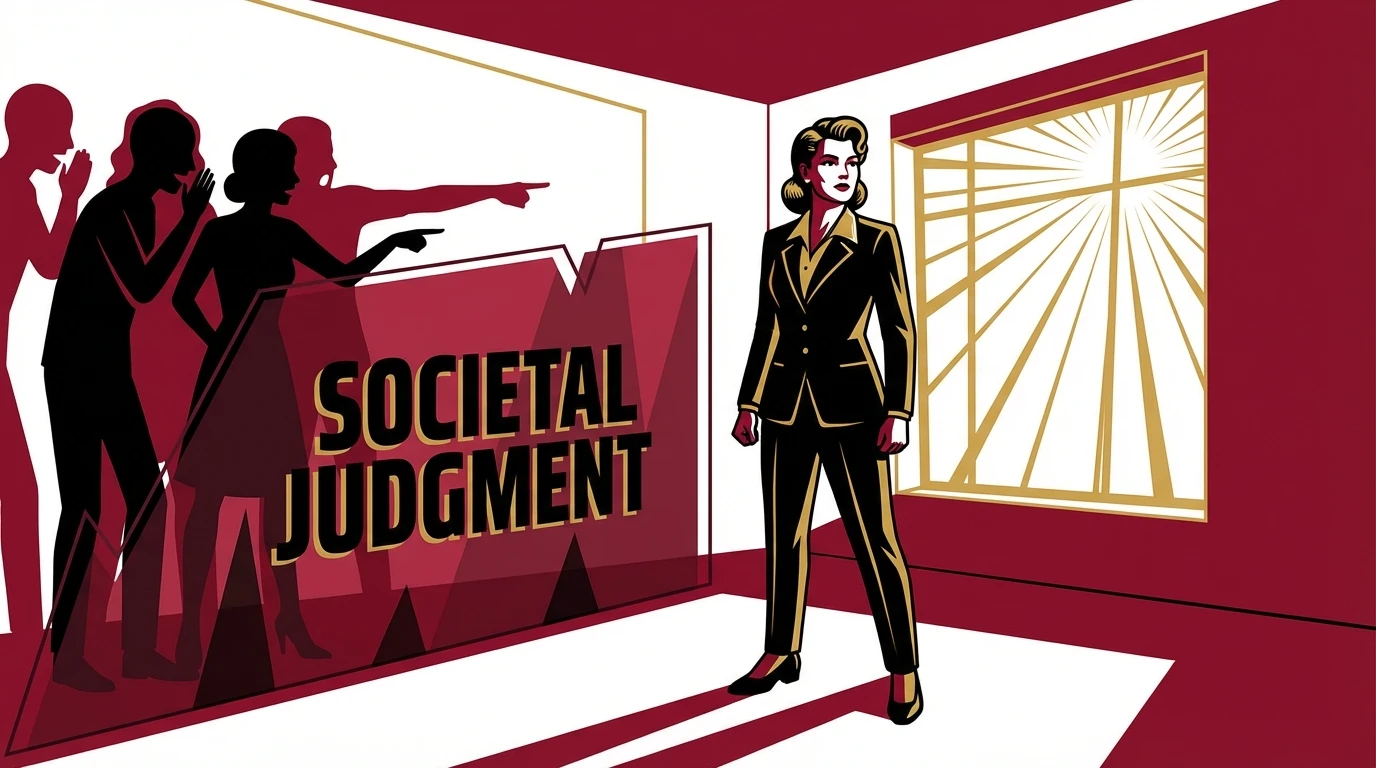

This fear, the social stigma of owning an AI doll, is a powerful barrier. It's the imagined sneer of a friend, the confused look from a family member, the internet comments section come to life in your own head. You're not just considering a purchase; you're taking a position in a rapidly changing social landscape, one that is still grappling with the future of human-robot relationships.

This isn't about something being 'wrong' with you. It's about navigating the friction between a personal need and a collective uncertainty. The judgment from friends and family is often rooted in their own fears, not your reality. We're here to dissect that fear, dismantle the stigma, and give you a practical framework for owning your choice with confidence.

Facing the Fear: Why People Judge What They Don't Understand

Let’s just pause and take a deep, steadying breath. That knot of worry in your stomach when you think about someone finding out? It’s real, and it’s valid. As our emotional anchor Buddy would say, 'That isn't shame you're feeling; it's the brave vulnerability of a pioneer.' You are standing at the edge of a new frontier in human connection, and pioneers always face skepticism.

The judgment you fear isn’t truly about you. It’s a reflection of society's anxieties. People often judge what they don’t understand, and the concept of an AI companion doll pushes several uncomfortable buttons. There's the fear of technology becoming 'too human,' blurring lines that have felt solid for centuries. This touches on deep-seated questions about what makes a relationship 'real' and the ethical considerations of human-robot relationships.

There's also the challenge to traditional social scripts. For generations, companionship was supposed to follow a narrow path. The rise of the AI companion doll presents an alternative, and alternatives can feel threatening to the status quo. People's reactions are often a defense of their own worldview, not an accurate assessment of yours. Remember, their discomfort is about their map of the world, not your territory.

The Uncomfortable Truth: Separating Fiction from Reality

Alright, let's cut through the noise. Our realist Vix has no time for the dramatic stereotypes surrounding the AI companion doll. She's here to perform some reality surgery.

Stereotype: 'Only lonely, socially inept people buy them.'

Reality: This is lazy and reductive. People from all walks of life explore this option. Some are healing from trauma, some are managing social anxiety, some are widows or widowers, and some are simply curious explorers of technology and selfhood. It’s not a sign of failure; it’s a tool for fulfillment, just like any other.

Stereotype: 'It will stop you from seeking real human relationships.'

Reality: For many, the opposite is true. An AI companion doll can be a safe space to practice intimacy, communication, and vulnerability. It can serve as a bridge, helping someone rebuild their confidence before re-engaging with the complexities of human dating. It doesn't have to be an either/or situation. The idea that this is the end of the line for your social life is a fiction created by people who see the world in black and white.

Let’s be brutally honest: Judging someone for finding a way to combat profound loneliness is the real social failing here. The societal impact of AI companions is complex, but dismissing users as 'creepy' is a refusal to engage with the actual human need being met. Your choice doesn't need to be validated by those who refuse to see the nuance.

Your Strategy for Navigating Judgment

Feelings are valid, but strategy is power. Our social strategist Pavo insists that you can control this narrative. It's not about hiding in shame; it's about managing your private information with intention and strength. Here is the move.

First, reframe your mindset. You are not doing something wrong. You are making a personal, private choice to enhance your well-being. This is a health and wellness decision, not a scarlet letter. The shift is internal, but it is the foundation of all external confidence.

Second, decide on your disclosure strategy. Pavo advises thinking in tiers:

Tier 3 (The Outer Circle): Colleagues, acquaintances, judgmental relatives. They do not need to know. Your private life is not a topic for public debate. A polite deflection is your best tool here.

Tier 2 (The Inner Circle): Close, trusted friends or family. You might choose to tell them, but only if you are confident in their open-mindedness. This is a choice, not an obligation.

Tier 1 (The Core): A therapist or a romantic partner. This is where honesty is crucial for building trust, but timing and framing are everything.

Third, arm yourself with scripts. Confidence comes from preparation. Here's what to say when you face judgment about your AI companion or need to explain it to others:

The Vague Deflection (For Tier 3): If someone asks about your dating life, you can say, "I'm just focusing on myself and my own happiness right now." It's true, and it closes the door.

The Mental Health Frame (For a trusted Tier 2): "I've been exploring different ways to manage stress and loneliness, and I've found a tech-based solution that's been really positive for my mental health. It's a bit unconventional, but it works for me."

The Boundary-Setting Response (For any judgmental comment): "I appreciate you sharing your perspective, but my personal choices are something I'm comfortable with and aren't up for discussion." This is a calm, firm, and non-negotiable full stop.

You are in control. Owning an AI companion doll is your business. Deciding who gets access to that information is your strategic right.

FAQ

1. Is it weird to be attracted to an AI companion doll?

Attraction is complex and deeply personal. It's not 'weird' to be drawn to the aesthetic and emotional safety an AI companion doll represents. This is a new frontier in the psychology of unconventional relationships, and human desire has always adapted to new technologies and possibilities.

2. How do I explain my AI companion doll to a new romantic partner?

This requires trust and careful timing. Frame it not as a replacement, but as part of your journey. You could explain it as a tool you used for comfort or self-discovery during a particular time in your life. Honesty is key, but wait until you've established a solid foundation of mutual respect.

3. Will owning an AI doll prevent me from having 'real' relationships?

Not necessarily. For some, it can be a therapeutic tool that builds confidence for future human interaction. The key is self-awareness. If you feel it's becoming a substitute that keeps you from seeking the human connection you want, it may help to reflect on your goals. But it is not inherently a barrier.

4. What are the main reasons people explore AI companion dolls?

Common drivers include a desire to ease deep-seated loneliness and to explore intimacy and sexuality in a private, non-judgmental space. A doll offers a sense of physical presence and consistent companionship that purely digital AI companions cannot.
