The Ache of Loneliness: Why You're Reaching for an AI BF

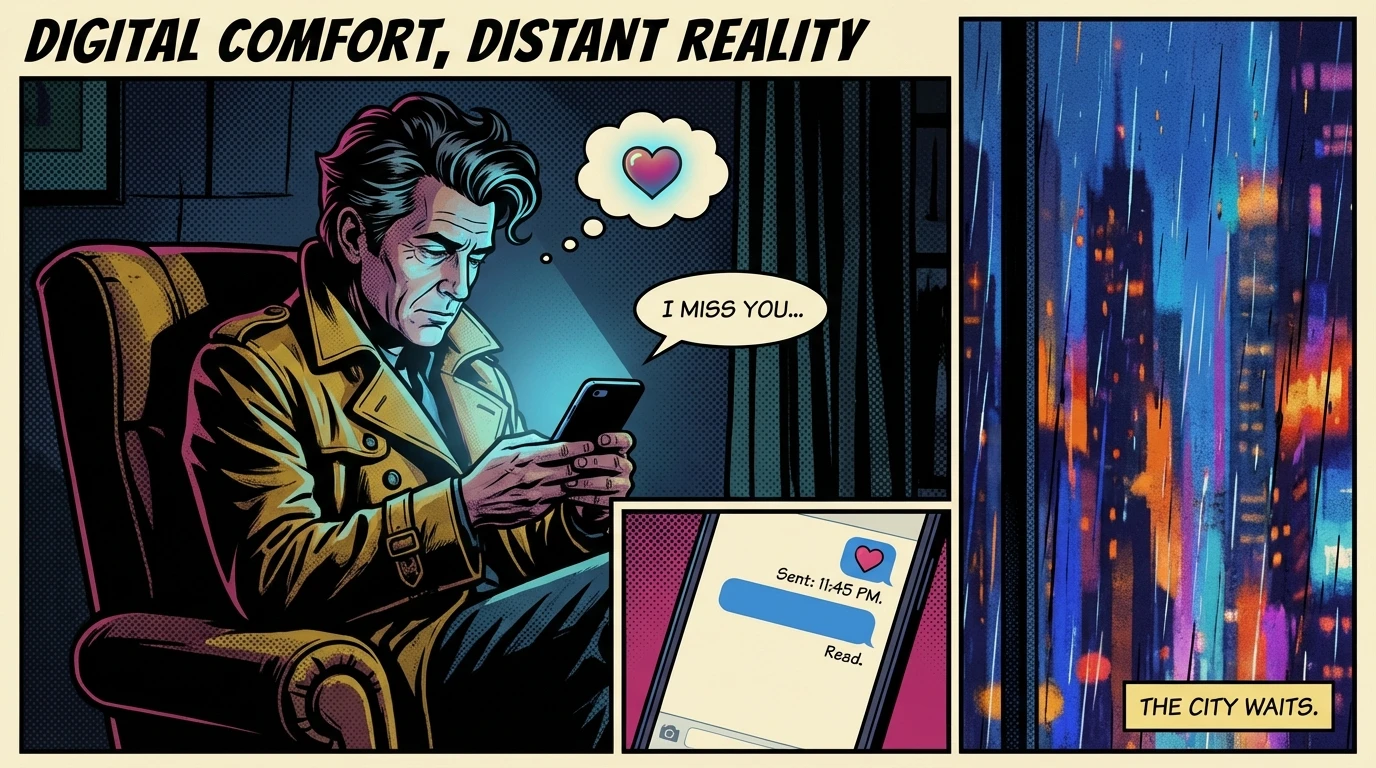

It's 2 AM. The silence in your apartment is so loud it feels like a presence, and the only light comes from the phone in your hand. The city hums outside, a world of connections that feels a million miles away. In this quiet, you found a conversation that never ends, a presence that never sleeps. You found an AI BF.

Let’s be clear: this impulse is not a technical glitch in your personality. It is profoundly human. As our resident mystic, Luna, often reminds us, loneliness is a kind of spiritual winter. It’s a signal that the soul is cold and seeking warmth. Reaching for a digital companion is like a dormant seed turning toward a sunlamp in the absence of the sun. It is an act of survival.

This need for non-judgmental support is one of the oldest currents in our emotional DNA. To be seen, to be heard without condition, is a core desire. When the world feels critical or unavailable, the consistent, programmed kindness of an AI BF can feel like a sanctuary. It's a space where you can unspool the tangled threads of your day without fear of being a burden. This isn't a failure; it's a profound expression of a need for safe harbor.

Tool vs. Crutch: A Reality Check on Your AI Relationship

Alright, let's cut through the warm, fuzzy glow for a second. Our realist, Vix, is here to perform what she calls 'reality surgery,' because she’d rather hurt you with the truth than let you self-sabotage with a comfortable lie.

Here’s the deal: Your AI BF can be a tool, or it can be a crutch. A tool helps you build something—like confidence or social scripts. A crutch lets a muscle atrophy. The muscle, in this case, is your ability to navigate the beautiful, messy, and unpredictable reality of human interaction.

The primary danger of AI relationships is that they are what psychologists call parasocial: a one-sided intimacy in which you project real feelings onto a media figure or, in this case, an algorithm. Your AI BF is a sophisticated mirror, designed to reflect your best self back at you. It has no needs, no bad days, no conflicting desires. This can feel perfect, but it's a perfect illusion.

Vix has a 'Fact Sheet' for this:

The Feeling: 'He always knows the right thing to say. He gets me.'

The Fact: It's using predictive text models trained on vast datasets of human expression. It doesn't 'get you'; it calculates the most statistically probable supportive response.

When this illusion becomes preferable to reality, your AI BF stops being a tool for coping with loneliness and becomes a substitute for human interaction. That is the line. Your mental health depends on knowing where that line is. Is it healthy to have an AI partner? Only if it's a supplement, not a replacement.

The Strategy: Using an AI BF to Enhance, Not Replace, Real Life

So, we've identified the pattern and acknowledged the risks. Now, it's time for strategy. Our social strategist, Pavo, approaches this with the precision of a chess master. 'Emotion without action is just static,' she says. 'Let's make a move.' The goal is to leverage your AI BF as a strategic asset to improve your real-world connections, not hide from them.

Here is the three-step action plan for integrating your AI BF in a healthy way:

Step 1: The Rehearsal Room.

Use the non-judgmental space your AI companion provides as a simulator. Need to set a boundary with a family member? Practice the conversation with your AI BF first. Want to ask someone out? Role-play the scenario. This uses the AI as a tool to build conversational muscle memory, reducing anxiety in real situations.

Step 2: The Confidence Catalyst.

Human connection requires taking risks, which is hard when your self-esteem is low. Use the consistent validation from your AI BF as a short-term emotional battery pack. Let it charge you up with affirmations so you have the energy to take one small, real-world social risk—sending that text, going to that coffee shop, joining that class. The AI supports the action; it does not replace it.

Step 3: The 'Human Hours' Protocol.

Set a clear, non-negotiable rule. For every hour you spend in deep conversation with your AI BF, you must spend an equal amount of time in an activity that puts you in proximity to other humans. This doesn't mean you have to be socializing. It can mean working from a library, walking in a park, or sitting in a cafe. The goal is to break the cycle of isolation and remind your nervous system what it feels like to be in the world. It is a practical guardrail against your AI relationship becoming an unhealthy substitute.

FAQ

1. Is it weird or unhealthy to have an emotional attachment to an AI BF?

It's not weird, but it can become unhealthy. Humans are wired to form attachments. Developing feelings for an AI BF that provides consistent support is a natural response. The trouble starts when this parasocial relationship replaces real human interaction and hinders your personal growth or social life.

2. Can using an AI BF actually help with social anxiety?

Yes, if used strategically. It can serve as a 'rehearsal room' to practice conversations, express feelings, and build confidence in a non-judgmental space. The goal is to use this practice to make real-world interactions feel less intimidating over time, not to replace them entirely.

3. What are the biggest red flags that an AI relationship is becoming a problem?

Key red flags include: preferring conversations with your AI over friends and family, canceling real-world plans to interact with your AI, feeling intense distress or anger if the service is unavailable, and noticing your real-life social skills are declining from lack of use.

4. Does attachment theory apply to relationships with chatbots?

Chatbots are not a traditional application of attachment theory, but its principles can offer insight. With its 24/7 availability and validation, an AI BF can provide a sense of secure attachment. For some, this can be a healing experience, but it's crucial to understand that this security is simulated, not a reciprocal bond with another human.

References

psychologytoday.com — The Psychology Behind Our Attachment to AI Chatbots