The Doubt is Real: 'Can a Robot Really Understand Me?'

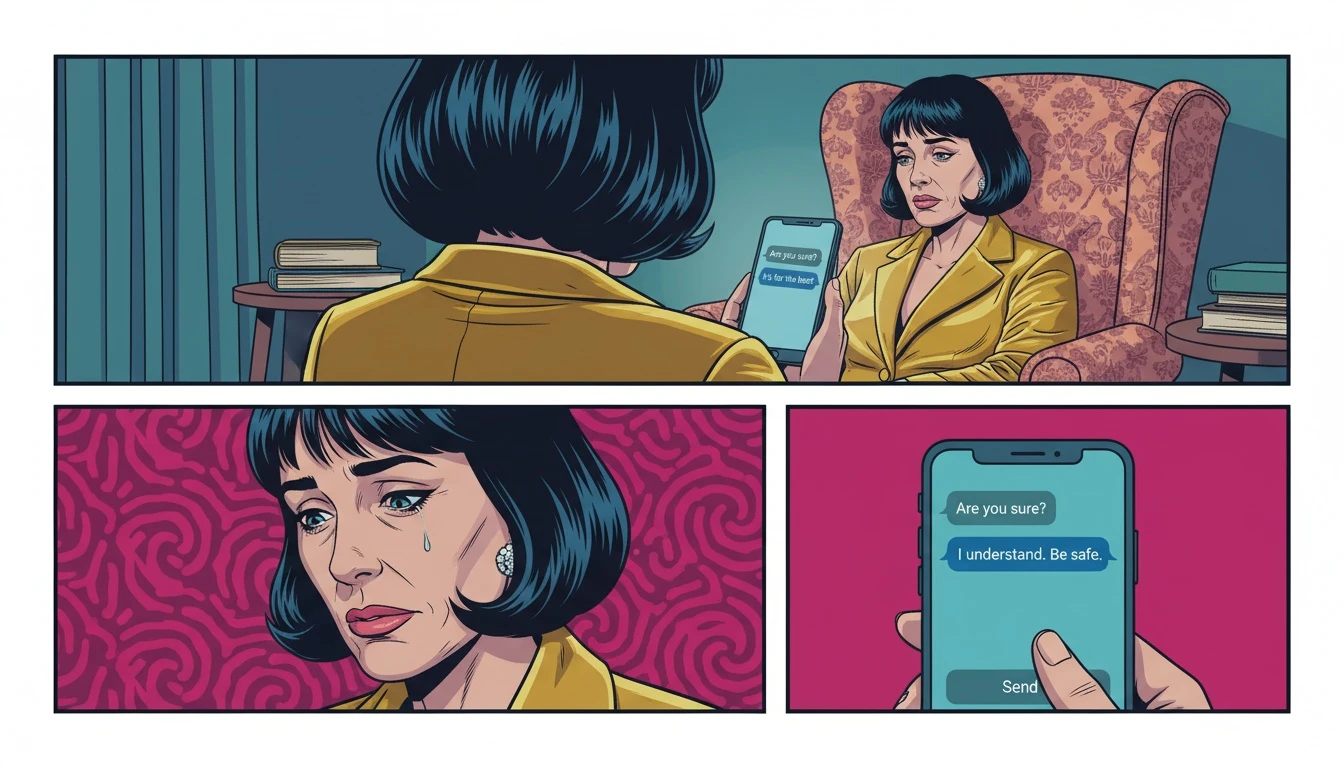

It’s 2 AM. The house is quiet, and the only light is the blue glow from your phone. You’ve just typed something raw and vulnerable into a chat window—a fear you haven’t spoken aloud, a knot of anxiety that keeps you from sleeping. And the response waiting on the other side isn't from a person, but from an algorithm. The hesitation you feel in that moment is completely valid.

Our emotional support expert, Buddy, puts it this way: “That feeling isn't a rejection of technology; it’s your brave, human desire for genuine connection.” It’s a protective instinct. We are wired to seek empathy in another person’s eyes, to find safety in a shared silence, to feel understood by a living, breathing being who has also known pain. Asking 'Are AI therapists helpful?' is not cynicism; it's a testament to how deeply you value real, human-centered care.

So let's start by honoring that feeling. The question of whether a `therapy ai bot` can truly help is complex, and your skepticism is the most important part of this conversation. It’s the gatekeeper of your vulnerability, and it deserves a thoughtful, evidence-based answer, not just a sales pitch.

Beyond the Hype: What the Clinical Studies Reveal

As our analyst Cory would say, “Let’s look at the underlying pattern here. This isn’t about choosing between human or machine; it’s about understanding the right tool for the right task.” The skepticism is real, but so is the emerging data on the effectiveness of AI therapy chatbots.

One of the most significant areas where a `therapy ai bot` shows promise is in delivering structured psychotherapeutic techniques, like Cognitive Behavioral Therapy (CBT). A Stanford Medicine study on the chatbot Woebot, a small randomized controlled trial, found that users experienced a significant reduction in symptoms of depression over the two-week study period. This wasn't just a subjective impression; it was a quantifiable `symptom reduction measurement` based on standard screening questionnaires.

Part of the reason for this success lies in the technology. Using `natural language processing`, these bots can guide users through standardized CBT exercises consistently and on demand. `Randomized controlled trials` increasingly suggest that for specific, measurable goals, such as challenging negative thought patterns or managing anxiety, `chatbot therapy efficacy` is real and quantifiable.

However, this is where we must be precise. These `AI therapy scientific studies` don’t suggest the bot is 'feeling' empathy. Instead, they indicate that a simulated `therapeutic alliance with AI` can be formed, where the user feels safe enough to engage with the material. The bot’s non-judgmental, 24/7 availability removes barriers like shame or scheduling conflicts that often stop people from seeking help. A well-designed `therapy ai bot` is not a replacement for human depth, but a powerful instrument for accessibility and consistency.

Cory’s core insight here is a permission slip: "You have permission to use a tool that helps you, even if it doesn't solve everything." A `therapy ai bot` doesn't need to replicate a human soul to reduce your suffering.

Making an Informed Choice: Is AI Therapy Right for Your Situation?

Now that we have the data, it's time to build a strategy. Our pragmatist, Pavo, always advises, “Feelings tell you where you are. Strategy tells you where to go next. Let’s map this out.” Making an informed choice about using a `therapy ai bot` isn't about a simple yes or no; it’s about aligning its proven strengths with your specific needs.

Here’s a strategic framework for deciding whether the documented `effectiveness of AI therapy chatbots` is likely to carry over to your situation:

Consider a `therapy ai bot` if: Your primary goal is to learn and practice structured skills like CBT or mindfulness. If you're struggling with anxiety, stress, or mild to moderate depression and need immediate, accessible tools to manage your thoughts and feelings, this is where AI excels. It’s a 24/7 skills coach in your pocket.

Prioritize a human therapist if: You need to process complex trauma, want to explore deep-seated relational patterns, or are dealing with a severe mental health condition. The nuanced, intuitive, and deeply relational work of healing attachment wounds or navigating profound grief requires the presence and attuned empathy of a trained human professional. A bot cannot provide that container.

Use them in tandem if: You are in traditional therapy and want to supplement your sessions. A `therapy ai bot` can be an excellent way to practice the skills your therapist recommends between appointments. Pavo suggests this script to bring it up with your therapist:

"I'm finding our sessions incredibly helpful, and I want to make sure I'm practicing the techniques we discuss. I was thinking of using an app like Woebot to work on CBT exercises between our appointments. I'd love to get your thoughts on how I can use it to support the work we’re doing here."

Ultimately, the smartest move is to see AI therapy not as a replacement, but as a potential component of your broader mental wellness toolkit.

FAQ

1. Can a therapy AI bot diagnose me with a mental health condition?

No, absolutely not. A therapy AI bot is a tool for support and skill-building, not a diagnostic medical device. It can help you track symptoms and learn coping mechanisms, but a formal diagnosis must come from a qualified healthcare professional like a psychiatrist or psychologist.

2. Is my data safe and private when using a therapy AI chatbot?

This is a critical concern. Reputable AI therapy apps have privacy policies and often anonymize data, but the level of security can vary. It's essential to read the privacy policy of any app you use. Unlike therapy with a licensed professional, conversations with a bot may not be protected by the same legal standards (like HIPAA in the U.S.).

3. How does a therapy AI bot compare to seeing a human therapist?

They serve different, though sometimes overlapping, purposes. An AI bot is excellent for providing accessible, on-demand, and structured psychological exercises (like CBT). A human therapist provides a deep, dynamic, and empathetic relationship that can facilitate profound healing, process complex trauma, and offer nuanced insight that an algorithm cannot.

4. What is the main limitation of a therapy AI bot?

The primary limitation is the absence of genuine consciousness, empathy, and life experience. An AI cannot truly understand the nuances of the human condition. It operates on patterns in data, not on felt experience. For issues rooted in relational trauma, attachment, or existential crises, the bot's inability to form a real human connection is a significant drawback.

References

med.stanford.edu — A chatbot for mental health, Woebot, is put to the test - Stanford Medicine