That Nagging Fear: Where Does My Data Go?

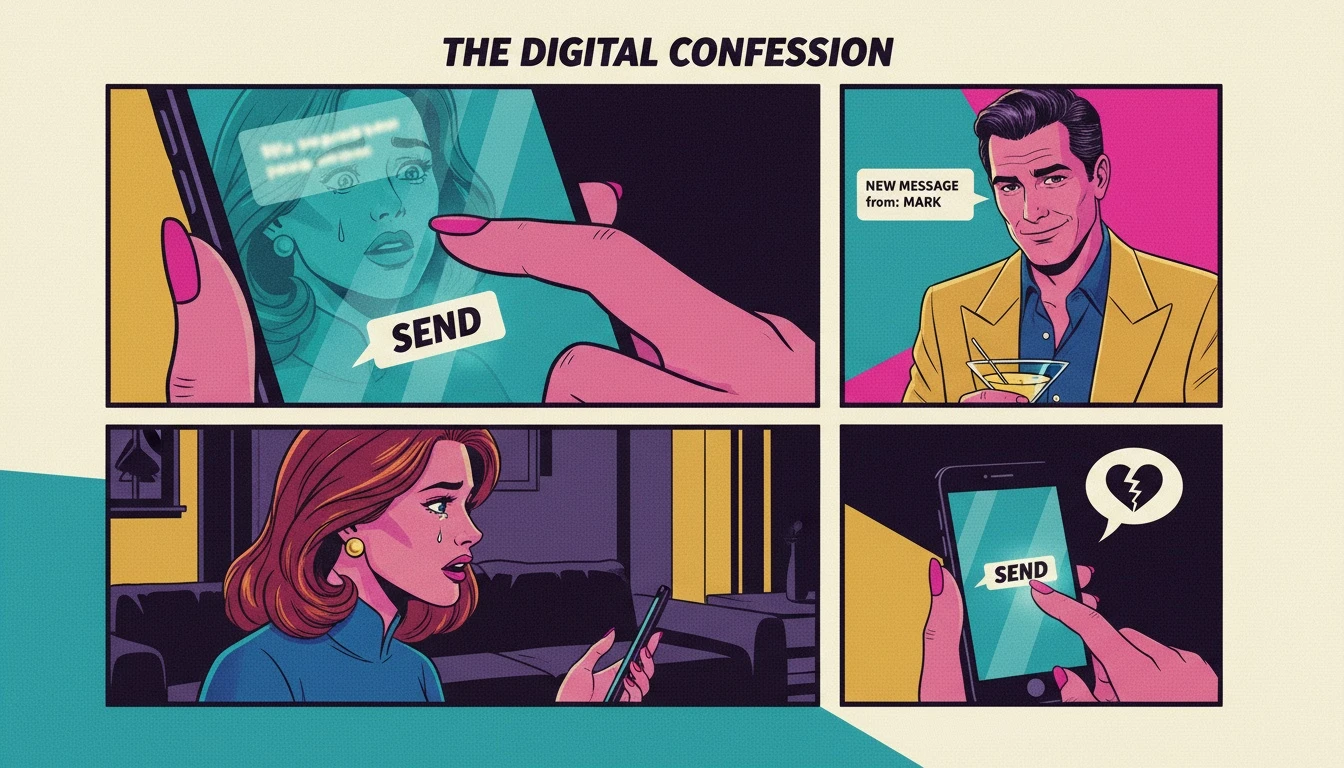

It’s late. The house is quiet, and the blue glow of your phone is the only light. You’ve just typed something deeply personal into a chat window: a fear you’ve never said aloud, a memory that still stings. You’re talking to an AI therapist, and for a moment, you feel a sense of release. Then a cold knot tightens in your stomach.

Your thumb hovers over the send button. Where does this go? Who sees this? Is this space truly safe, or am I just whispering my secrets into a microphone connected to a boardroom?

Let’s just pause and take a breath here. Our emotional anchor, Buddy, would want you to know this first: That fear you feel isn't paranoia; it's wisdom. It’s your protective instinct kicking in, reminding you that vulnerability requires a foundation of trust. You are right to question where your data goes. You are smart to be cautious.

The promise of an AI therapist is incredible: an affordable, 24/7, judgment-free space to unpack your thoughts. But that promise is only as good as the platform's commitment to your privacy. Your question about AI therapy data privacy is not just a technical one; it's about making sure your sanctuary is secure.

The Hard Truth About Mental Health App Data

Alright, let's cut through the marketing fluff. Our realist, Vix, believes you deserve the unvarnished truth, because a clear view of the risks is the only way to truly protect yourself.

First, let's talk about the big one: HIPAA. You've probably seen apps advertised as "HIPAA compliant AI," but here's the reality check: most consumer-facing mental health apps are not bound by HIPAA. As the American Psychological Association notes, HIPAA rules typically apply to health care providers and their business associates, not necessarily to the wellness app you downloaded from an app store. An app calling itself 'secure' is not the same as an app that is legally bound by health privacy law.

Next up: data encryption versus anonymized user data. They are not the same thing. Encryption is like locking your diary in a safe: it scrambles the data so it's unreadable to anyone without the key, even if it's stolen. Anonymization is like tearing your name off the diary's cover. It's supposed to strip away personal identifiers, but it isn't foolproof: details left behind, such as your ZIP code, your age, or when you tend to log in, can sometimes be matched against other datasets to re-identify you.
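To make the re-identification risk concrete, here is a toy Python sketch. It "anonymizes" a few made-up chat records by deleting names and emails, then links them back to people using only the details left behind (ZIP code and birth year). The records, field names, and the "public list" are all hypothetical; real re-identification is messier, but the principle is the same.

```python
# Toy illustration: removing names is not the same as anonymity.
# Every record and field name below is made up for demonstration.

chat_logs = [
    {"name": "Alex Rivera", "email": "alex@example.com", "zip": "94110",
     "birth_year": 1990, "note": "panic attacks before work"},
    {"name": "Sam Lee", "email": "sam@example.com", "zip": "10027",
     "birth_year": 1985, "note": "trouble sleeping"},
]

# Fields treated as "personal identifiers" in this sketch.
DIRECT_IDENTIFIERS = {"name", "email"}


def strip_identifiers(record):
    """'Anonymize' a record by dropping only the direct identifiers."""
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}


anonymized = [strip_identifiers(r) for r in chat_logs]

# A separate, hypothetical dataset (say, a marketing list) that still has names.
public_list = [
    {"name": "Alex Rivera", "zip": "94110", "birth_year": 1990},
    {"name": "Sam Lee", "zip": "10027", "birth_year": 1985},
]


def reidentify(anon_records, known_people):
    """Link 'anonymous' notes back to names by matching leftover details."""
    matches = []
    for rec in anon_records:
        for person in known_people:
            if (rec["zip"], rec["birth_year"]) == (person["zip"], person["birth_year"]):
                matches.append((person["name"], rec["note"]))
    return matches


if __name__ == "__main__":
    for name, note in reidentify(anonymized, public_list):
        print(f"{name}: {note}")
```

Nothing in the "anonymized" records names anyone, yet two leftover fields were enough to match each note to a person. That is why a policy promising only "anonymized" sharing deserves a closer look.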

And now for the coldest truth. The reason so many AI therapist apps are cheap or free? You might not be the customer; you might be the product. Selling data to third parties, such as advertisers or research firms, is a common business model. Buried in the terms of service you skipped may be a clause that lets the company share or sell your 'anonymized' data. Your deepest anxieties, packaged and sold.

Your 5-Step Privacy Checklist Before You Chat

Feelings aren't enough; we need a strategy. Our pragmatist, Pavo, insists on turning anxiety into action. Before you invest your trust in any AI therapist, you need to become your own security auditor. Here is the move.

This isn't about fear; it's about control. Here is your checklist for vetting any platform and keeping your mental health data secure. Do not skip these steps.

Step 1: Scrutinize the Privacy Policy.

Don't just glance at it. Use your browser's find feature (Ctrl+F, or Cmd+F on a Mac) and search for key phrases: "sell data," "third parties," "advertising partners." If the language is vague or gives the company broad rights to share your information, that is your answer. A trustworthy AI therapy platform will have a clear, human-readable policy that explicitly states it does not sell user data.
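If you've saved a privacy policy as plain text, the find-and-search pass can be scripted. Here is a minimal Python sketch using the standard `re` module. The patterns are illustrative choices that loosely widen the phrases above (so "sell your data" and "sold" are caught, not just "sell data"); they are not a vetted list, and the sample policy text is made up.

```python
import re

# Illustrative patterns only; each one loosely widens a phrase from Step 1.
RED_FLAG_PATTERNS = {
    "selling": r"\b(?:sell\w*|sold)\b",
    "third parties": r"\bthird[- ]part(?:y|ies)\b",
    "advertising": r"\badvertis\w*",
}


def find_red_flags(policy_text, patterns=RED_FLAG_PATTERNS, context=40):
    """Return (label, snippet) pairs for every case-insensitive match.

    Each snippet includes up to `context` characters on either side of the
    match, so you can read the sentence it came from.
    """
    hits = []
    for label, pattern in patterns.items():
        for match in re.finditer(pattern, policy_text, flags=re.IGNORECASE):
            start = max(match.start() - context, 0)
            end = min(match.end() + context, len(policy_text))
            # Collapse line breaks and repeated spaces for readable output.
            snippet = " ".join(policy_text[start:end].split())
            hits.append((label, snippet))
    return hits


if __name__ == "__main__":
    # Made-up policy excerpt for demonstration.
    sample_policy = (
        "We do not sell your personal information. We may share usage data "
        "with third parties and advertising partners to improve our services."
    )
    for label, snippet in find_red_flags(sample_policy):
        print(f"[{label}] ...{snippet}...")
```

The script prints surrounding text rather than a verdict on purpose: "we do not sell" and "we may sell" both contain the word "sell," and only reading the sentence tells them apart.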

Step 2: Verify Encryption Standards.

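Look for two terms: "encryption in transit" (often described as TLS or HTTPS), which protects your messages on their way to the company's servers, and "encryption at rest," which protects your data while it's stored on those servers. Be careful with claims of "end-to-end encryption." In a messaging app, that term means no one, not even the company, can read your messages. But an AI can only respond to what it can read, so unless the model runs entirely on your device, the company's servers typically process your words in readable form. If a platform doesn't mention data encryption specifically, assume the worst.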

Step 3: Understand Their Anonymization Process.

A good platform will explain how anonymized user data is handled. Do they use it to train their own AI models? Do they share aggregated, non-identifiable insights? The more transparent they are about this, the better. If it's a black box, be wary.

Step 4: Create a Digital Firewall.

Never sign up for an AI therapist using your real name, primary email address, or social media accounts. Use a pseudonym, and create a new email address used only for that app. This simple step creates a crucial barrier between your therapeutic conversations and your personal identity.

Step 5: Test the Waters.

Don't dive into your deepest trauma on day one. Start with lower-stakes topics. Talk about a frustrating day at work or a minor disagreement. See how the platform feels. This helps you build trust gradually and lets you walk away with minimal exposure if you spot any red flags. This initial phase helps you answer the question "Is AI therapy safe for me?" based on experience, not just promises.

FAQ

1. Is an AI therapist HIPAA compliant?

Usually, no. Most AI therapy and wellness apps are not considered 'covered entities' under HIPAA. Truly HIPAA-compliant AI tools are rare and are typically found in clinical settings, not in direct-to-consumer apps. Always check a platform's specific claims and its privacy policy.

2. Can AI therapy apps sell my data?

Yes, if their terms of service allow it. This is a major AI therapy data privacy concern. Many free or low-cost apps generate revenue by selling data to third parties, often after it has been 'anonymized.' Reading the fine print is crucial.

3. What's the difference between encryption and anonymization?

Data encryption scrambles your data so it's unreadable without a key, protecting it from hackers. Anonymization strips personal identifiers (like your name or email) from your chat logs. Both matter for mental health app security, but anonymized data can sometimes be re-identified.

4. How can I tell if my AI therapist is safe?

Absolute safety can't be guaranteed, but you can reduce the risk. Look for a clear privacy policy, strong data encryption, and an explicit commitment not to sell user data. Start by sharing less sensitive information, and never use your real name or primary email address.

References

American Psychological Association (apa.org). "What do your clients need to know about the privacy of mental health apps?"