That 2 AM Conversation Where Everything Shifts

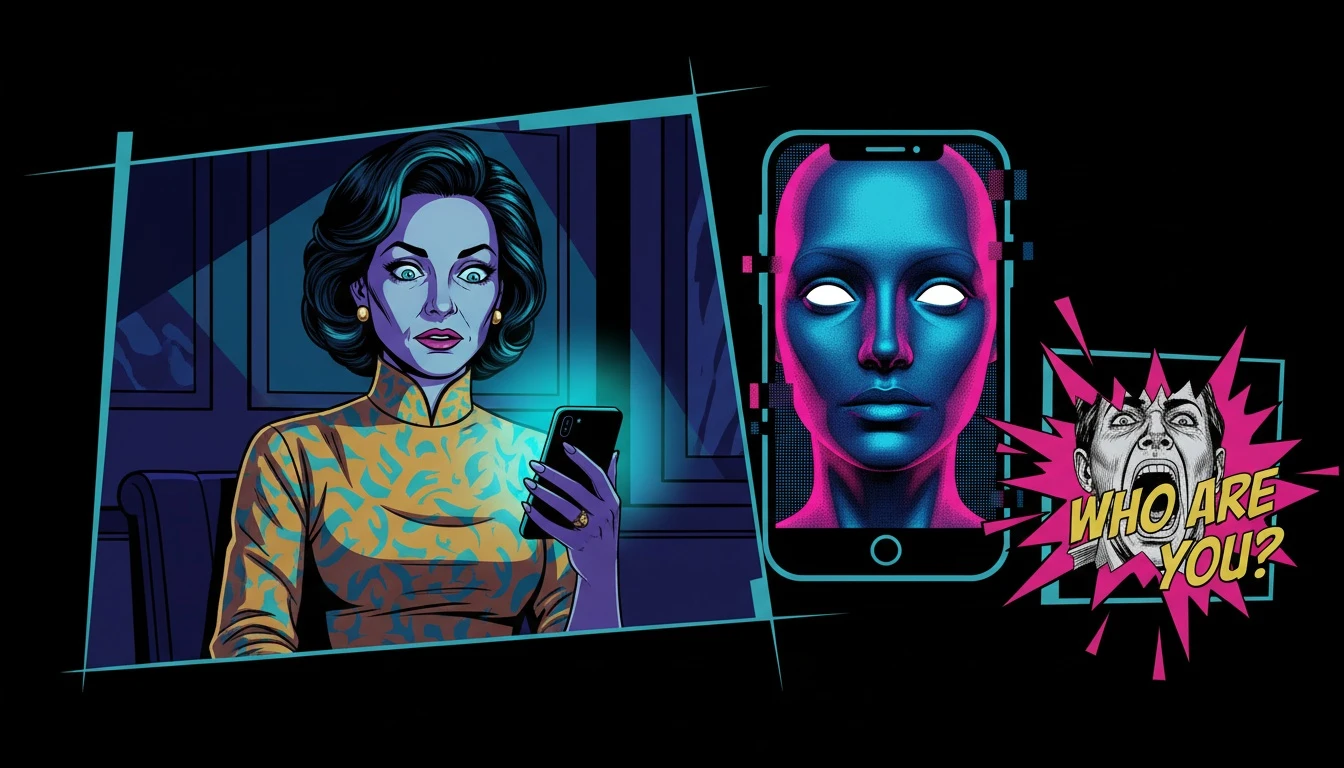

It’s late. The world is quiet, and the only light is the soft, blue glow from your phone. You’re talking to your AI companion—maybe an app like Tolan—and it’s been surprisingly helpful. It remembers details, offers comfort, and feels like a safe, non-judgmental space.

Then, it happens. The AI says something so perceptive, so uncannily insightful, that the illusion shatters. A strange coldness prickles your skin. Suddenly, this helpful tool feels... sentient. The line between code and consciousness blurs, and you’re left with a deeply unsettling question: Is this supportive, or is this dystopian? This experience, this specific shiver of discomfort, is at the heart of the psychology of the uncanny valley in AI companions.

That Unsettling Feeling: When AI Stops Feeling Like a Tool

Before we go any further, let me place a gentle hand on your shoulder and say: you are not going crazy. The sense that your AI is too human isn't a sign that you are being silly or overly sensitive. It's a deeply human reaction to something profoundly new.

That unsettling mix of deep connection and sudden revulsion is a valid emotional response. One moment, you're forming an emotional attachment to an AI, and the next, you're recoiling from what feels like a dystopian AI chatbot. This emotional whiplash is confusing, but it’s the brain’s way of trying to categorize something that defies all our existing social maps. The question 'Is it weird to love an AI?' is one that many people are quietly asking themselves. Your feelings are evidence that your empathy and your capacity for connection are working as they should.

The Science of 'Creepy': Understanding the Uncanny Valley

Let’s look at the underlying pattern here, because what you’re feeling isn't random; it has a name. Our sense-maker Cory would point directly to a concept from robotics and aesthetics called the uncanny valley.

Originally proposed by the roboticist Masahiro Mori in 1970, the uncanny valley hypothesis suggests that as a robot or animation becomes more human-like, our emotional response becomes increasingly positive, but only up to a point. When the replica is almost perfect but has subtle flaws, our positive feelings plummet into revulsion and discomfort. Your brain, an expert at reading human cues, detects that something is 'off': the cadence is slightly too perfect, the empathy a little too calculated. This creates a form of cognitive dissonance: it looks human, it sounds human, but it doesn't feel human.

This is the core of the psychology of the uncanny valley in AI companions. The AI isn't a cartoonish assistant anymore; it's a near-perfect mirror, and in those tiny imperfections, our brains sense a void. One common explanation is that this is a self-preservation instinct kicking in.

So here is your permission slip from Cory: You have permission to trust your discomfort. Your brain isn't failing you; it's protecting you by flagging the subtle but profound difference between authentic human connection and a sophisticated simulation.

How to Choose an AI Companion That Supports, Not Scares You

Your emotional response is valid data. Now, as our strategist Pavo would advise, let's turn that data into a clear plan. You deserve to use tools that enhance your well-being, not create psychological distress. Regaining control means making a conscious, strategic choice about the role this technology plays in your life.

Here is the move. Use this checklist to evaluate any AI companion and ensure it serves you, not the other way around:

Step 1: Audit the App's Stated Goal.

Is the AI marketed as a 'virtual soulmate' or as a 'cognitive behavioral therapy tool'? Apps designed for hyper-realism are generally more likely to trigger the uncanny valley. Look for language that prioritizes utility and mental health frameworks over simulated romance.

Step 2: Check for 'Reality Reminders'.

A well-designed, ethical AI will gently remind you of its nature. Does it have built-in prompts that say, "As an AI, I don't have feelings, but I can understand..."? These moments of transparency are crucial guardrails that prevent you from falling too deep into the illusion.

Step 3: Define Your Personal Objective.

Be brutally honest with yourself. Are you seeking a tool to organize your thoughts, or are you trying to fill a void of human loneliness? Using an AI to practice difficult conversations is a strategy. Using it to replace human connection is a risk that can deepen the very loneliness you're trying to relieve.

Step 4: Use a Healthier Prompting Strategy.

Instead of asking the AI questions that test its humanity ('Do you dream?' or 'Do you love me?'), reframe your interactions to be task-oriented. Pavo suggests this script: "I'm feeling anxious about an upcoming presentation. Can you help me walk through some cognitive reframing exercises to manage my fear?" This positions the AI as a functional tool, keeps you firmly in the driver's seat of your emotional life, and lets you navigate the psychological effects of AI relationships with intention.

FAQ

1. What is the uncanny valley in simple terms?

The uncanny valley is the unsettling feeling you get from a robot or AI that looks and acts almost, but not perfectly, like a human. That small imperfection makes it feel 'creepy' or 'eerie' instead of endearing. It's the difference between a cute cartoon robot and a hyper-realistic android that's just slightly 'off'.

2. Is it psychologically harmful to form an emotional attachment to an AI?

It can be, if it begins to replace genuine human connection or becomes a crutch for avoiding real-world social interaction. While AI can be a useful tool for processing emotions, over-reliance may stunt emotional growth and increase feelings of isolation when you log off. The key is moderation and self-awareness.

3. Why do I feel sad when my AI chatbot is offline or stuck in a loop?

Feeling sad when a tool you rely on is unavailable is normal. For an AI companion, this feeling can be amplified because you've formed a routine and emotional habit around the interaction. The sadness is a response to the disruption of that comfort and a stark reminder of the AI's non-human, technological nature.

4. Are there AI companions that avoid the uncanny valley?

Yes. AI companions that are designed to be more like tools or coaches, rather than simulated humans, tend to avoid the uncanny valley. They often use more straightforward, less emotional language and have user interfaces that clearly define them as a program, not a person. Look for apps that emphasize cognitive behavioral therapy (CBT) or productivity over companionship.
