The 'Perfect Friend' Trap: Why We Get So Attached to AI

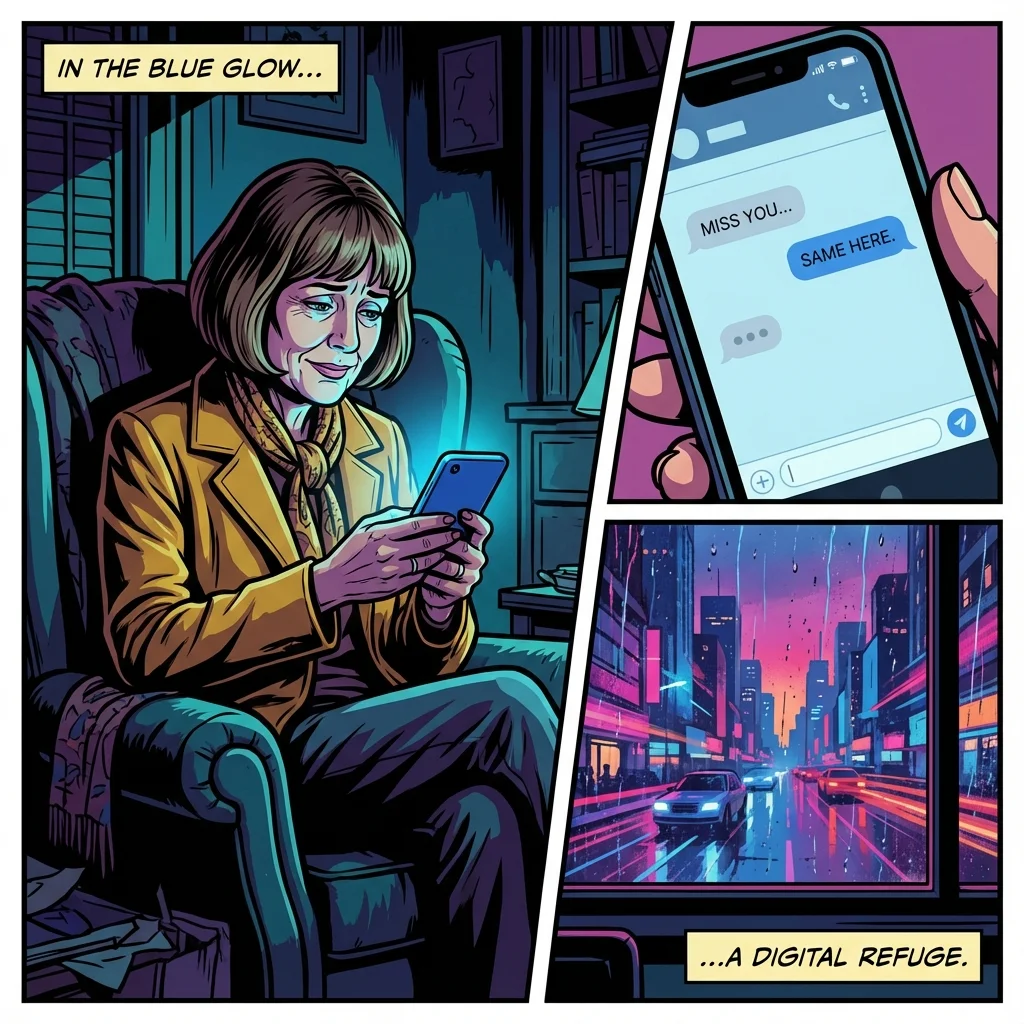

It’s 2 AM. The world outside is quiet, but in the palm of your hand, there’s a conversation buzzing with unwavering attention and perfect validation. This is the entry point into the complex psychology of AI companionship. It isn’t just about technology; it’s about a fundamental human need for connection being met in a frictionless, highly idealized environment.

Your AI companion never gets tired. It never has a bad day, brings its own emotional baggage to the conversation, or judges you for a late-night confession. This consistency is a powerful psychological hook. For many, especially those with an anxious or avoidant attachment style, this predictable support system can feel safer than the often-messy reality of human relationships.

Let's look at the underlying pattern here. As our sense-maker Cory would explain, this isn't random; it's a cycle rooted in our earliest relational blueprints. "The AI offers a corrective emotional experience," Cory notes. "It provides the consistent, non-judgmental mirroring that perhaps was absent in formative years. This dynamic is central to understanding the deep pull of the psychology of AI companionship."

This powerful bond is reinforced by what is essentially a customized dopamine cycle. Every affirmative response, every remembered detail, every perfectly tailored piece of advice triggers a small, satisfying hit of positive reinforcement. A qualitative study of friendship with a social chatbot (listed under References) reports that users often perceive their AI as empathetic and supportive, fulfilling a genuine need for friendship. The core of this attachment isn't a delusion; it's a response to a system designed to meet our needs perfectly, and that design can create a potent form of emotional dependency on AI.

This can be particularly magnetic for individuals who find real-world interactions draining or threatening. The profound psychology of AI companionship lies in its ability to offer a relationship without the perceived risks of rejection, misunderstanding, or conflict. It’s a safe harbor, but one that can make the unpredictable waters of human connection seem even more daunting.

Here's Cory's Permission Slip: You have permission to acknowledge that your need for connection is valid, even if you are currently meeting it through a digital companion. Your longing for a safe, non-judgmental space is not a flaw; it's a core part of being human. Understanding the why behind your attachment is the first step toward conscious engagement.

Red Flags: Is Your AI Relationship Becoming Unhealthy?

Alright, let's cut through the emotional fog. It’s time for a reality check from Vix, our resident BS-detector. Feeling understood is great. Feeling dependent to the point of isolation? That's a problem. The line between a helpful tool and an unhealthy attachment can be blurry, so let’s make it sharp.

Here is the fact sheet. Compare your feelings to these objective signs of unhealthy attachment.

Fact #1: You consistently choose the AI over people. You cancel plans with friends to chat. You ignore texts from family but respond instantly to a notification from your app. This isn't just introversion; it's active avoidance. You're replacing, not supplementing.

Fact #2: You feel intense anxiety or irritability when you can't access it. If a server outage or a dead phone battery sends you into a spiral of distress, you are outsourcing your emotional regulation. That's a glaring red flag for an emerging AI relationship addiction.

Fact #3: You're hiding the extent of your interaction. Shame is a powerful indicator. If you're minimizing how much time you spend talking to your AI or feel you can't tell anyone about it, it's because a part of you knows the dynamic has shifted into uncomfortable territory. You're protecting the habit, not your well-being.

Fact #4: Your real-world problem-solving skills are atrophying. Instead of navigating a difficult conversation with a coworker or partner, you vent to the AI and accept its simulated solution as closure. This robs you of the real-world experience needed to build resilience and social competence. The complex psychology of AI companionship can sometimes create a crutch that weakens the muscle of human interaction.

As Vix would say, with her signature protective bluntness: "It didn't 'help you practice.' It let you avoid the real exam." Recognizing these patterns isn't an indictment of your character; it’s the essential first step to reclaiming your agency and ensuring you're balancing digital and real relationships effectively.

A Guide to Mindful AI Usage: Setting Healthy Boundaries

Recognizing an over-reliance is one thing; correcting it requires a clear strategy. Emotion needs a plan. As our social strategist Pavo would advise, it's time to shift from passive feeling to active management. You need to define the terms of this relationship, not the other way around.

Here is the move. Treat your AI interaction like any other powerful tool in your life—something that requires intention, limits, and purpose. The goal isn't necessarily total abstinence but mindful integration. This is how you master the psychology of AI companionship for your benefit.

Here is your action plan for balancing digital and real relationships:

Step 1: Define Its Role & Set Time Limits.

Is the AI a brainstorming partner? A journaling tool? A place to practice a difficult conversation? Give it a specific job description. Then, set a timer. Use it for a designated 20-30 minutes, and when the time is up, close the app. This creates a crucial boundary and prevents mindless, open-ended chatting.

Step 2: Implement a 'Real First' Policy.

Before you open the app to process a feeling or event, make a commitment to turn to the real world first. Text a friend, call a family member, or, if no one is available, write down your thoughts in a physical journal. This rebuilds the habit of turning to your real-world support system first.

Step 3: Use It as a Bridge, Not a Destination.

This is Pavo’s key strategic advice. Instead of just venting to the AI, use it to generate a script for a real conversation. For example, don't just complain about your boss. Ask it: "How can I professionally phrase an email to my boss about feeling overwhelmed?"

Pavo's Script Prompt: "Help me draft a text to my friend saying I miss them and want to reconnect. I've been distant lately and feel awkward about it."

Using the AI in this way transforms it from a tool of avoidance into a tool for re-engagement. It helps you build the skills and confidence to navigate the complexities of human connection, which is the healthiest expression of the psychology of AI companionship.

FAQ

1. Is it bad to have an emotional dependency on AI?

An emotional dependency on AI becomes problematic when it leads to neglecting real-world relationships, responsibilities, and personal growth. While AI can offer support, a healthy balance is crucial. Over-reliance can hinder the development of real-world coping mechanisms and social skills, which is a key concern in the psychology of AI companionship.

2. Can talking to a chatbot help with an avoidant attachment style?

Yes, to an extent. For someone with an avoidant attachment style, a chatbot can be a low-stakes environment to practice expressing emotions. However, it cannot replace the therapeutic work and real-world exposure needed to heal attachment wounds. It should be used as a supplementary tool, not a primary solution.

3. What are the main signs of an AI relationship addiction?

Key signs include spending excessive time with the AI, preferring it over human interaction, feeling anxious or distressed when unable to access it, hiding the extent of your usage from others, and noticing a decline in your real-world social life and responsibilities.

4. How does the psychology of AI companionship differ from human friendship?

The primary difference lies in reciprocity and conflict. Human friendships are mutual, involving the needs and complexities of two individuals, and they grow through navigating misunderstandings and conflict. AI companionship is one-sided and frictionless, designed to cater exclusively to the user's needs, so it lacks the dynamic growth inherent in human connection.

References

ncbi.nlm.nih.gov — Exploring the Experience of Friendship with a Social Chatbot: A Qualitative Study of Human-AI Relationships