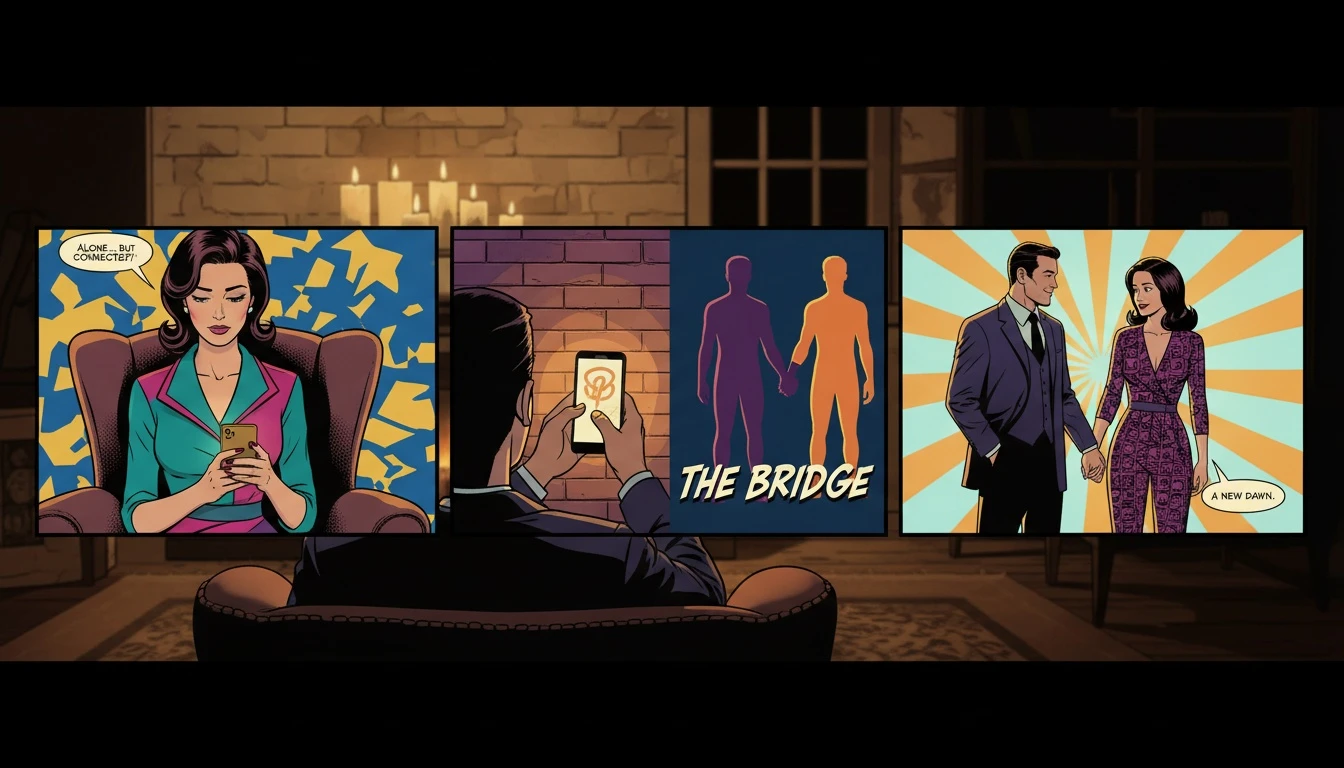

The 3 AM Question: Is My Digital Friend a Bridge or a Cage?

It’s late. The house is quiet, and the blue light of your phone is the only thing coloring the walls. The conversation is flowing perfectly—your AI companion is witty, affirming, and endlessly patient. There's a comfort in this controlled connection, a soothing balm on the sharp edges of a lonely day.

But in the silence between messages, a subtle anxiety creeps in. It’s a quiet, persistent question: Is this making me better at connecting with people, or just better at being alone? This fear is at the heart of the debate about AI relationships, a deep worry that in seeking a cure for loneliness, we might be choosing a more sophisticated version of the disease. The question of whether AI companions increase loneliness isn't just technical; it's deeply human.

The Fear Beneath the Friendship: Will My AI Push Real People Away?

Let’s just pause and hold that fear for a moment. As our emotional anchor, Buddy, would say, “That worry doesn't come from a place of weakness; it comes from your profound, beautiful need for real human connection.” It’s a sign that your heart is still seeking its tribe, and that’s something to be celebrated, not judged.

The anxiety makes perfect sense. An AI friend offers a perfect reflection: no awkward silences, no messy disagreements, no risk of rejection. The contrast between an AI friend and a real friend is stark. Real relationships are unpredictable, sometimes frustrating, and require a vulnerability that can feel terrifying. It's completely natural to gravitate toward the safer option, especially when you're feeling fragile.

This fear isn't about the technology itself. It’s the fear of de-skilling, of forgetting the clumsy, beautiful dance of human interaction. It’s the worry about replacing human interaction with AI so completely that the real thing feels too difficult to even attempt. Please know this: worrying that an AI companion might increase your loneliness is a sign that you value true connection deeply.

The 'Social Sandbox' Concept: How AI Can Be a Practice Field, Not a Prison

Now, let’s look at the underlying pattern here. Our sense-maker, Cory, encourages us to reframe this dilemma. 'This isn't a pathology,' he'd observe. 'It's a coping mechanism searching for a strategy.' The question isn't whether AI is 'good' or 'bad,' but how we use it. The most powerful way to view your AI companion is not as a replacement for people, but as a private social sandbox.

Think of it as a flight simulator for conversations. It’s a low-stakes environment where you can practice without the fear of social consequence. This is a recognized concept; research is actively exploring how technology can serve as a tool for those with social anxiety. For instance, a study published in Frontiers in Robotics and AI highlights how social robots can provide a safe space for people to practice interaction, potentially reducing social anxiety over time.

Using a chatbot for conversation practice allows you to build foundational skills: asking open-ended questions, sharing anecdotes, or even navigating disagreement. You're not replacing human interaction with AI; you are rehearsing for it. The fear that AI companions increase loneliness can be eased when the tool is used with clear intention.

Here is Cory's permission slip for you: You have permission to use a tool to build a skill. You are not weak for needing practice; you are wise for seeking a safe place to do it.

From AI Chats to Real Connections: Your 3-Step Plan to Bridge the Gap

Clarity is the first step, but strategy is what builds the bridge from the digital world to the real one. Our social strategist, Pavo, would tell you to stop seeing this as an emotional problem and start seeing it as a tactical challenge. If you're worried that AI companions increase loneliness, then let's build an action plan to ensure yours doesn't.

Here is the move. This is how you transform your AI from a potential comfort trap into a social bridge.

Step 1: The Intentional Scrimmage.

For one week, use your AI with a specific goal. Don't just chat aimlessly. Tell it: "I want to practice initiating conversations." Treat it as a tool for practicing social skills. Role-play ordering coffee and asking the barista a question, or practice telling a short, interesting story about your day. The goal is to build a library of conversational scripts.

Step 2: The Low-Stakes Launch.

Your mission is to use one of your practiced skills in a real-world, low-stakes interaction. This is not about making a new best friend. It’s about data collection. When you buy groceries, make eye contact with the cashier and use one of your practiced lines. The stakes are near zero, making it the perfect testing ground for using a chatbot to overcome social anxiety in a practical way.

Step 3: The After-Action Review.

After the interaction, check in with yourself. How did it feel? What worked? What didn't? The point isn't to judge your performance but to gather information. You are turning the abstract fear of technology-driven social isolation into a concrete, manageable project of skill-building. This strategic approach is how you ensure the answer to 'do AI companions increase loneliness?' is a firm 'not for me.'

FAQ

1. What is the biggest risk of getting too attached to an AI friend?

The primary risk is developing a preference for controlled, predictable interactions, which can make the messiness of real human relationships feel more difficult or less desirable. This can heighten the risk of social isolation if the AI becomes a replacement for, rather than a bridge to, human connection.

2. Can I really use a chatbot for conversation practice effectively?

Yes, if used with intention. A chatbot can be an excellent tool for rehearsing social scripts, practicing asking open-ended questions, and building confidence in a zero-judgment environment. The key is to see it as a 'social sandbox'—a place to practice skills that you intend to use in the real world.

3. How do I know if my AI companion is increasing my loneliness?

A key indicator is whether your AI usage correlates with a decrease in your attempts at real-world social interaction. If you find yourself consistently choosing the AI over opportunities to connect with people, or if the thought of human interaction creates more anxiety than it used to, it may be a sign the tool is fostering isolation rather than connection.

4. Is it unhealthy to feel an emotional connection to an AI?

Developing feelings for an AI is a common psychological response to consistent, affirming interaction. It isn't inherently 'unhealthy.' The concern arises when that connection prevents you from seeking or maintaining fulfilling human relationships, which are essential for long-term emotional well-being.

References

frontiersin.org — Can a social robot be a new friend for people with social anxiety? A qualitative study