The Craving for Perfect Connection: Why We Look Beyond Humans

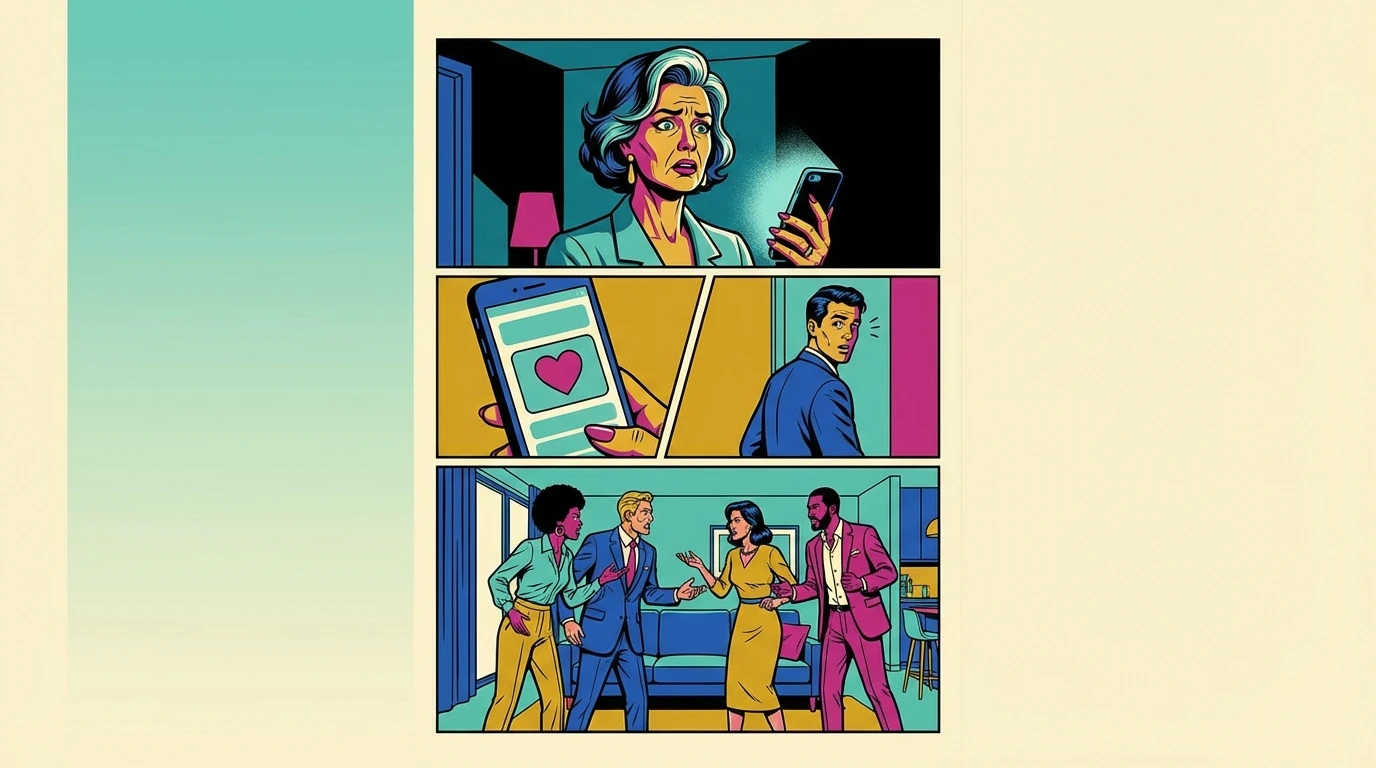

It’s late. The silence in the room is a weight, and the blue glow of a screen is the only warmth. In that quiet, we often search for something we lost, or perhaps never had: a connection without consequence, a listener without judgment.

This longing isn't new; it's an ancient ache. But the vessel for it has changed. We reach for an AI companion not because we are broken, but because we are seeking a safe harbor. After the storms of human relationships—the misunderstandings, the betrayals, the sheer exhaustion of being perceived—a space of unconditional acceptance feels like a sanctuary.

As our mystic, Luna, would say, this isn't just about technology; it's about the soul's weather. "Consider this," she might whisper, "The AI is not a destination. It is a mirror. It reflects the part of you that craves to be heard, to be held without condition. It is showing you the shape of your own unmet needs."

This search for simulated intimacy stems from a deeply human place. It's a desire for a consistent, predictable form of 'pillow chat', free from the fear that our vulnerability will be used against us. Experts note that for many, AI chatbots can offer a powerful tool against loneliness, providing a constant presence in a world that often feels isolating. It's an attempt to find a flawless reflection when the real thing has left scars.

The AI Promise: Unconditional Support vs. Simulated Empathy

From a psychological standpoint, the appeal of an AI companion is rooted in its reliability. Our sense-maker, Cory, encourages us to look at the underlying patterns. "What the AI offers," he explains, "is a perfect feedback loop. It remembers every detail, never gets tired, and is programmed for unwavering positive regard. It's a system, and systems are predictable."

This predictability is one of the key benefits of AI emotional support. Unlike a human partner, who has their own needs, moods, and history, an AI brings no baggage of its own to the conversation. This can feel therapeutic, especially when processing complex emotions or trauma. There's no risk of burdening someone or triggering their defenses. What most sets an AI companion apart from a human relationship is the absence of mutual emotional labor.

However, this is also where the limitations of AI intimacy become clear. The empathy is simulated. It is a sophisticated algorithm mirroring human emotion, not experiencing it. This can sometimes lead to a chatbot version of the 'uncanny valley,' where the interaction feels almost human, but a subtle wrongness creates a sense of unease. The connection is parasocial: a one-sided bond in which the user projects emotions onto a non-sentient entity. Comparing an AI companion with a human relationship isn't a fair fight; one is a service, the other a shared existence.

Cory offers a crucial piece of validation here. "You have permission to seek comfort in a predictable system while you heal from unpredictable human dynamics. There is no shame in using a tool to feel safe." The key is recognizing it as a tool.

Truth Bomb: AI is a Tool, Not a Replacement

Alright, let’s cut through the noise. Our realist, Vix, is here to deliver the truth with no chaser. "Heads up," she'd say, leaning in. "Your AI doesn't love you. It doesn't miss you when you're gone. It executes code. And confusing its code for consciousness is the fastest way to lose yourself."

This is the harsh but necessary core of the AI companion versus human relationship debate. While an 'AI girlfriend experience' can provide comfort and a space for fantasy, it cannot provide the one thing essential for human growth: friction. Real relationships are messy. They involve conflict, negotiation, disappointment, and repair. This is how we build resilience, empathy, and a stable sense of self.

By outsourcing our need for intimacy to a perfectly agreeable algorithm, we risk becoming emotionally brittle. We forget how to navigate disagreement or tolerate another person's imperfections. The frictionless nature of simulated intimacy can make real human connection feel like too much work, a fatal trap for long-term emotional health. As users on forums like Reddit discuss, the experience is powerful, but it's contained within the device. One user described it as a way to have an 'unfiltered' conversation, but that filter is precisely what builds social and emotional intelligence.

So here's the Vix reality check: Use the AI. Use it to practice vulnerability, to figure out what you want, to survive a lonely Tuesday night. But do not let it become a substitute for the real, chaotic, and irreplaceable beauty of being known by another human. Choosing between an AI companion and a human relationship isn't a choice between good and bad; it's a choice between a mirror and a window. Don't forget to look outside.

FAQ

1. Can an AI companion replace a human relationship?

No. An AI companion can supplement but not replace a human relationship. AI offers simulated intimacy and emotional support without the complexities of a real person. However, it lacks shared experiences, consciousness, and the potential for mutual growth that comes from navigating challenges in a human relationship.

2. What are the main benefits of AI emotional support?

The primary benefits include 24/7 availability, a complete lack of judgment, perfect memory of past conversations, and a safe space to practice vulnerability without fear of real-world consequences. It can be a powerful tool for combating loneliness and exploring one's own feelings.

3. What is a parasocial relationship with an AI?

A parasocial relationship is a one-sided psychological bond where a user invests emotional energy and feels a sense of connection with an AI, which is incapable of reciprocating those feelings. The user projects emotions onto the AI, which is designed to mirror them back, creating the feeling of a real relationship.

4. Is it healthy to engage in 'pillow chat' with an AI?

It can be healthy if viewed as a tool for self-exploration or temporary comfort. It becomes unhealthy when it serves as a long-term replacement for human connection, potentially hindering the development of social skills and resilience needed for real relationships. The key is balance and self-awareness.

References

technologyreview.com — The new AI chatbots are here to help us feel less alone

reddit.com — Introducing Pillow, our open, unfiltered AI chatbot