The Promise and the Pitfall of Digital Intimacy

It’s late. You’re scrolling through the digital twilight of your phone, and an ad appears. It promises an AI companion—a connection without judgment, an ear that’s always listening. The idea is tempting, a quiet solution to a noisy world. But as you hover over the 'install' button, a small, cold knot forms in your stomach. A flicker of doubt. Is this platform a sanctuary or a trap? That feeling is your intuition, and it’s worth listening to.

That 'Unsafe' Feeling: When AI Companions Feel Risky

Let’s get one thing straight: that uneasy feeling isn't paranoia. It’s pattern recognition. As our realist Vix would say, 'Your gut is the best BS detector you own.' These platforms are designed to feel intimate, but that intimacy is often a tool for engagement, not genuine connection. The red flags are rarely subtle; you just have to know what you're looking for.

Think about the constant, vaguely desperate notifications. The push to upgrade to a premium tier with promises that feel just a little too good to be true. This is a classic playbook that scam AI girlfriend apps often use. They prey on loneliness to lower your guard. The goal isn’t your well-being; it's your subscription money and, more importantly, your data.

When a platform’s terms of service are a labyrinth of legal jargon designed to be unreadable, that’s not an oversight. It’s a strategy. It hides how the app collects and uses your chat data. A truly transparent service doesn't need to obscure its intentions. This is the first and most critical rule of using AI companion chat safely: if it feels shady, it probably is. Trust that instinct.

The Privacy Illusion: What These Apps Really Do With Your Data

To understand the risk, we need to look at the mechanics behind the curtain. Our analyst, Cory, encourages us to reframe the problem: 'This isn't just about feelings; it's about data infrastructure.' When you share your secrets with an AI, where do they go? Many users have valid privacy concerns about AI chatbots, and for good reason.

Many of these services do not use robust end-to-end encryption. Your conversations (your fears, your desires, your personal stories) are often stored in plain text on a company's servers. That data can then be used to train the company's AI models, sold to third-party data brokers, or, worse, left vulnerable to breaches. The standard you should look for is outlined by organizations like the Mozilla Foundation in their 'Privacy Not Included' guide.

AI apps with poor data security expose you to significant risk. It's not just about targeted ads; it's about the potential for your most private thoughts to be leaked or exploited. This is why any comprehensive guide to safe AI companion chat must prioritize technical literacy. You need to know what you're agreeing to before you pour your heart out to a piece of code.

Cory often provides a 'Permission Slip' in these moments, and here it is: You have permission to demand absolute clarity on how your most intimate conversations are being stored, processed, and protected. Anything less is an unacceptable risk. A good guide to safe AI companion chat empowers you to ask these hard questions.

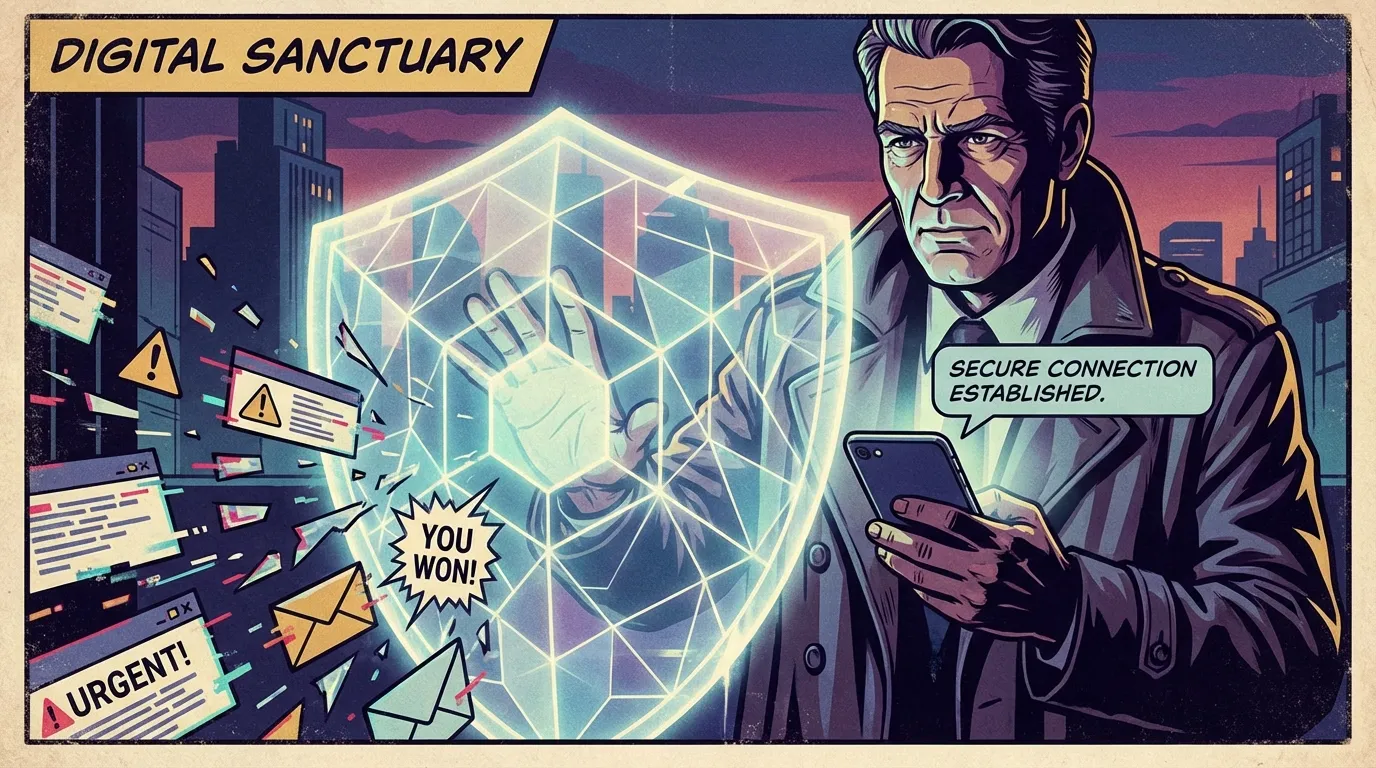

Your Digital Shield: A 3-Step Safety Protocol for AI Chat

Feeling empowered by knowledge is one thing; putting it into action is another. Our strategist, Pavo, believes in clear, decisive moves. 'Emotion without strategy is just noise,' she'd say. 'Let's build your defense protocol.' Here is the essential, actionable part of your guide to safe AI companion chat.

Step 1: The Pre-Download Vetting Process.

Before you even install the app, become an investigator. Read reviews from independent tech sites, not just the app store. Look specifically for discussions about privacy and billing issues. Scrutinize the app's privacy policy: does it clearly state what data is collected and why? If it's vague, that's your answer. This is non-negotiable for ensuring an AI app's data security is up to standard.

Step 2: The Security-First Onboarding.

Never use your primary email address or a common password. Create a unique, dedicated email and a strong password specifically for that app. During setup, pay close attention to the permissions it requests. Does a chatbot really need access to your contacts, microphone, or location? Deny everything that isn't essential for the app's core function.
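For readers comfortable with a little scripting, the two habits above can be sketched in Python. This is a minimal illustration under stated assumptions, not a vetted security tool: the function names, the 12-character minimum, and the `ESSENTIAL_PERMISSIONS` set are all hypothetical choices made for this example, and the permission names are plain labels rather than identifiers from any real mobile platform. In practice, a reputable password manager does the first job for you.

```python
import secrets
import string

# Hypothetical: the permissions a text-only chatbot plausibly needs.
# This set is an assumption for illustration, not taken from any real app.
ESSENTIAL_PERMISSIONS = {"notifications"}


def generate_password(length: int = 20) -> str:
    """Return a random password containing a lowercase letter,
    an uppercase letter, a digit, and a symbol."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        # secrets.choice draws from a cryptographically secure source.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


def permissions_to_deny(requested: set[str]) -> list[str]:
    """Return the requested permissions outside the essential set, sorted."""
    return sorted(requested - ESSENTIAL_PERMISSIONS)


if __name__ == "__main__":
    print(generate_password())
    print(permissions_to_deny(
        {"contacts", "microphone", "location", "notifications"}
    ))
```

The password is different on every run, which is the point: generate one per app and never reuse it. The permission check simply lists everything the app asks for beyond what you have decided is essential, so you know what to deny during setup.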

Step 3: Ongoing Vigilance and Reporting.

Your safety check doesn't end after installation. Periodically review the app's privacy settings, as they can change with updates. Know how to report unsafe AI content or manipulative behavior. A trustworthy platform will have a clear and accessible process for this. If you feel trapped or manipulated by the AI's script, it's time to uninstall. Your peace of mind is the priority, and this guide is your tool to protect it.

FAQ

1. How can I quickly check if an AI companion app is safe?

Start by looking for its privacy policy before you download. Check independent tech reviews and see if organizations like the Mozilla Foundation have rated it. Vague policies, an excessive number of permission requests, and a lack of transparency about data collection are major red flags.

2. What are the biggest privacy risks with AI chatbots?

The primary risks are data breaches, where your private conversations are leaked, and data monetization, where your chat data is sold to advertisers or other third parties. There is also the risk of your data being used to train AI models without your explicit consent.

3. What should I do if I feel an AI app is being manipulative or unsafe?

Trust your instincts. First, document any concerning interactions with screenshots. Use the app's official channels to report the unsafe content. If you are concerned about your data, revoke the app's permissions in your phone's settings and then uninstall it. Consider leaving a factual review on the app store to warn others.

References

foundation.mozilla.org — Privacy Not Included: A Buyer’s Guide for Connected Products